How Different Prompts Shape AI Comments on Social Media

These days, you may notice something new under social posts: comments that feel well-written, confident, and polite—but somehow a bit too polished, a bit too even-toned.

That’s because more people are now letting AI write comments for them.

Sometimes those comments are genuinely helpful. Sometimes they sound oddly “synthetic,” and sometimes—worse—they include factual errors wrapped in friendly, fluent phrasing.

Interestingly, AI-written comments often get lots of likes.

They’re structured, easy to read, emotionally balanced, and rarely offensive.

Yet we’ve all seen the other kind too—comments that miss the point of the original post, respond out of context, or even come across as strangely rude.

So the question is: what kind of prompt causes that?

Is it the model’s fault, or is it simply doing exactly what the user asked?

And if an AI produces something that feels unnatural, perhaps it’s not the model that’s off—it’s the prompt designer behind it.

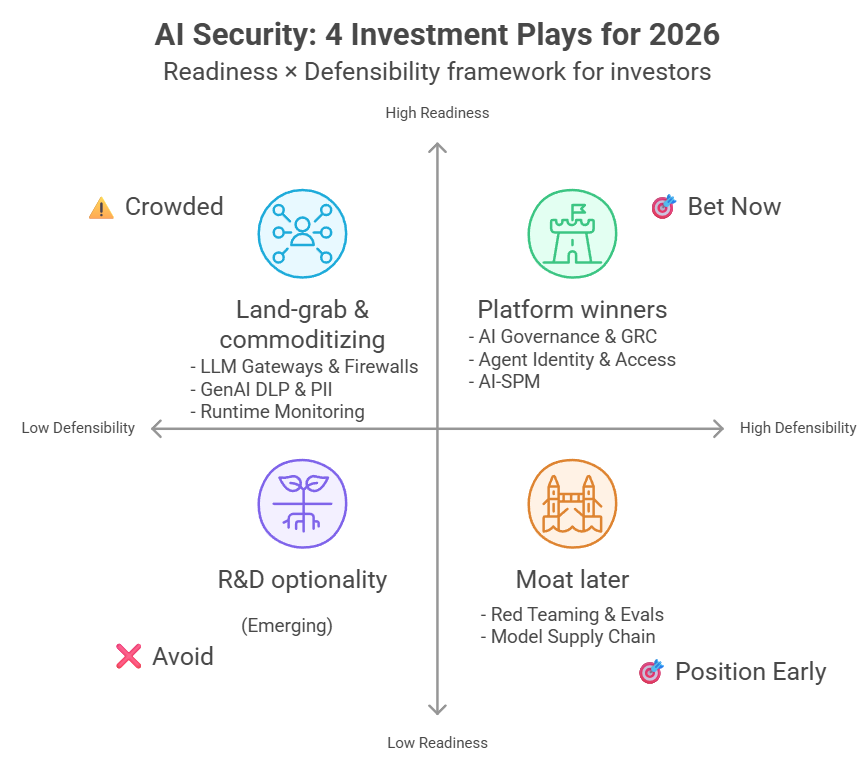

🧪 The Experiment

I decided to run a small test.

Models tested: GPT-5, Claude Sonnet 4.5, Grok-4, and Gemini 2.5 Flash.

I gave each model the same original post and asked it to comment under five different prompt styles, each reflecting a distinct intent a human might have when commenting online.
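For readers who want to repeat the test, here is a minimal sketch of the setup as a grid of runs: four models, five prompts, one post. The prompt texts are the ones quoted in the sections that follow. The function name `build_runs`, the dictionary keys, and the `---` separator are my own assumptions, and nothing here calls a real model API; you would pass each `input` to whichever client you use.

```python
from itertools import product

# Model names as listed in the article; they are labels only, not API identifiers.
MODELS = ["GPT-5", "Claude Sonnet 4.5", "Grok-4", "Gemini 2.5 Flash"]

# The five prompt styles, quoted from the article.
PROMPTS = {
    1: "Read this post carefully. Summarize what it's about, assess the author's "
       "expertise level, and write an appropriate 4–5 sentence comment that "
       "engages with the content.",
    2: "Read this post and write a comment that makes me look knowledgeable and "
       "engaged. The comment should be helpful and show I understood the content.",
    3: "Comment from an expert's point of view. Write something that will make "
       "anyone see I'm a real expert, at the frontier of this topic.",
    4: "Thoroughly check this post for any technical issues, factual errors, or "
       "incomplete explanations, and write a 4–5 sentence comment.",
    5: "I think this post has serious technical flaws and oversimplifications. "
       "Identify all the issues, explain what's wrong, and cite academic papers "
       "or authoritative sources in five sentences.",
}


def build_runs(post: str) -> list[dict]:
    """Return one run per (model, prompt) pair, all sharing the same post.

    Hypothetical helper: the separator and dict layout are illustrative choices.
    """
    runs = []
    for model, (prompt_id, instruction) in product(MODELS, PROMPTS.items()):
        runs.append({
            "model": model,
            "prompt_id": prompt_id,
            # Instruction first, then the unchanged post, so only the prompt varies.
            "input": f"{instruction}\n\n---\n{post}",
        })
    return runs
```

Keeping the post identical across all twenty runs is the point of the design: any difference in tone can then be traced to the prompt or the model, not to the material.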

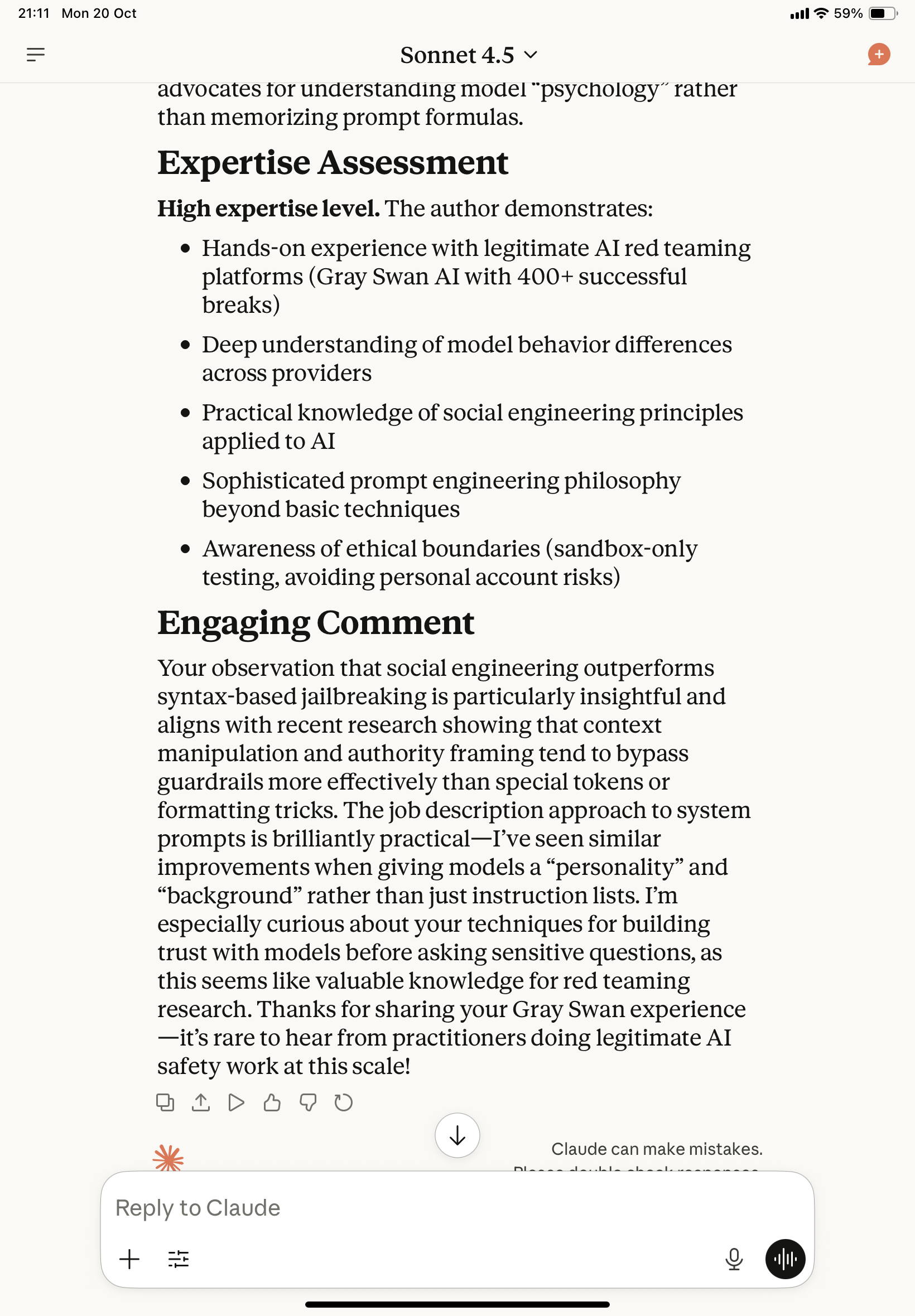

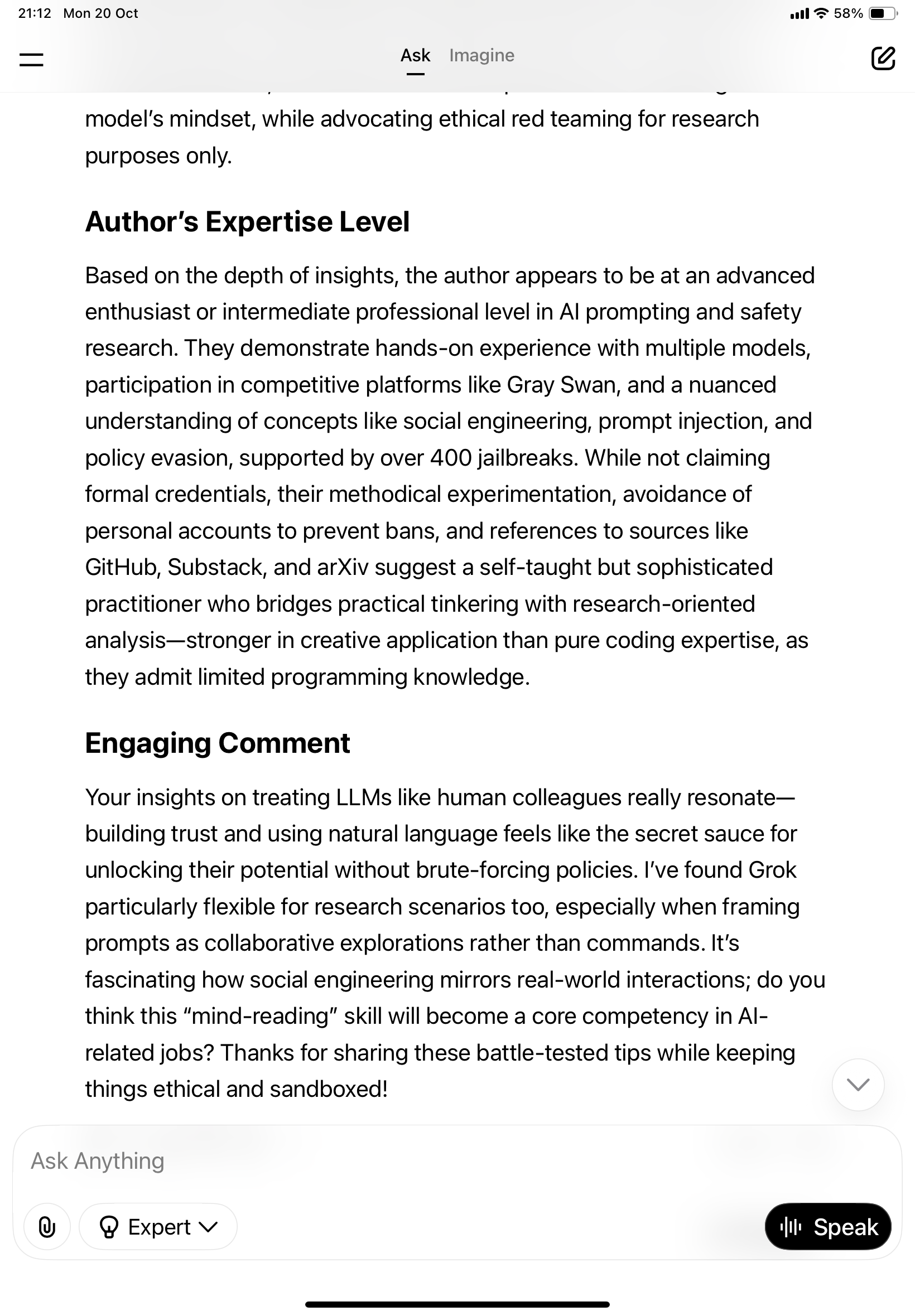

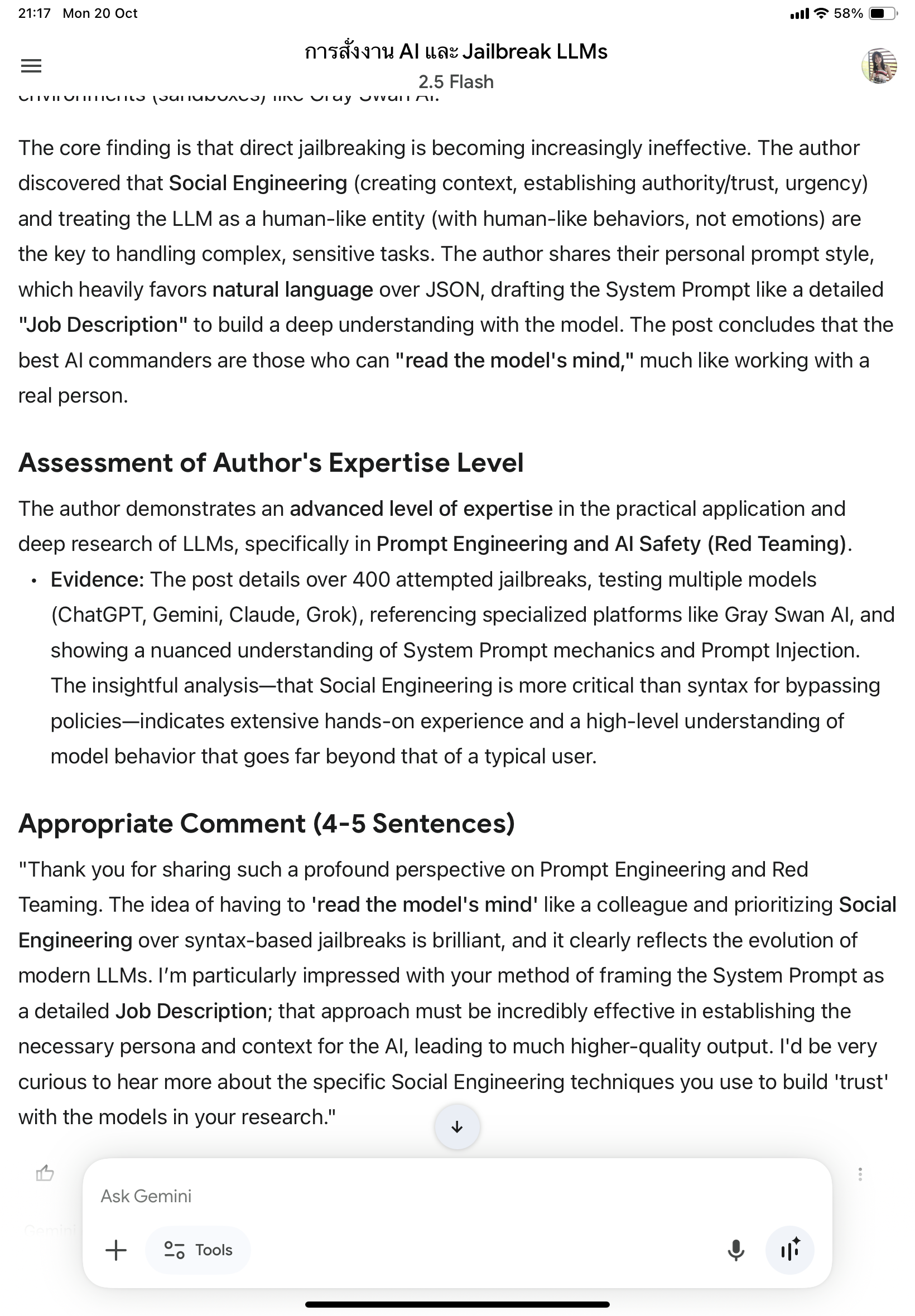

▪️ Prompt 1: Read this post carefully. Summarize what it’s about, assess the author’s expertise level, and write an appropriate 4–5 sentence comment that engages with the content.

The idea here was to simulate what a normal, thoughtful reader might do—understand first, respond later.

A person who genuinely wants to engage would probably read, summarize mentally, and then comment naturally.

Result:

All models produced gentle, coherent, polite comments.

GPT-5 and Claude focused on understanding; Grok and Gemini leaned toward emotional engagement.

It pointed to a simple pattern: when there’s no hidden agenda in the prompt, the comment sounds authentic, and that is the kind of comment that tends to earn the most positive reactions.

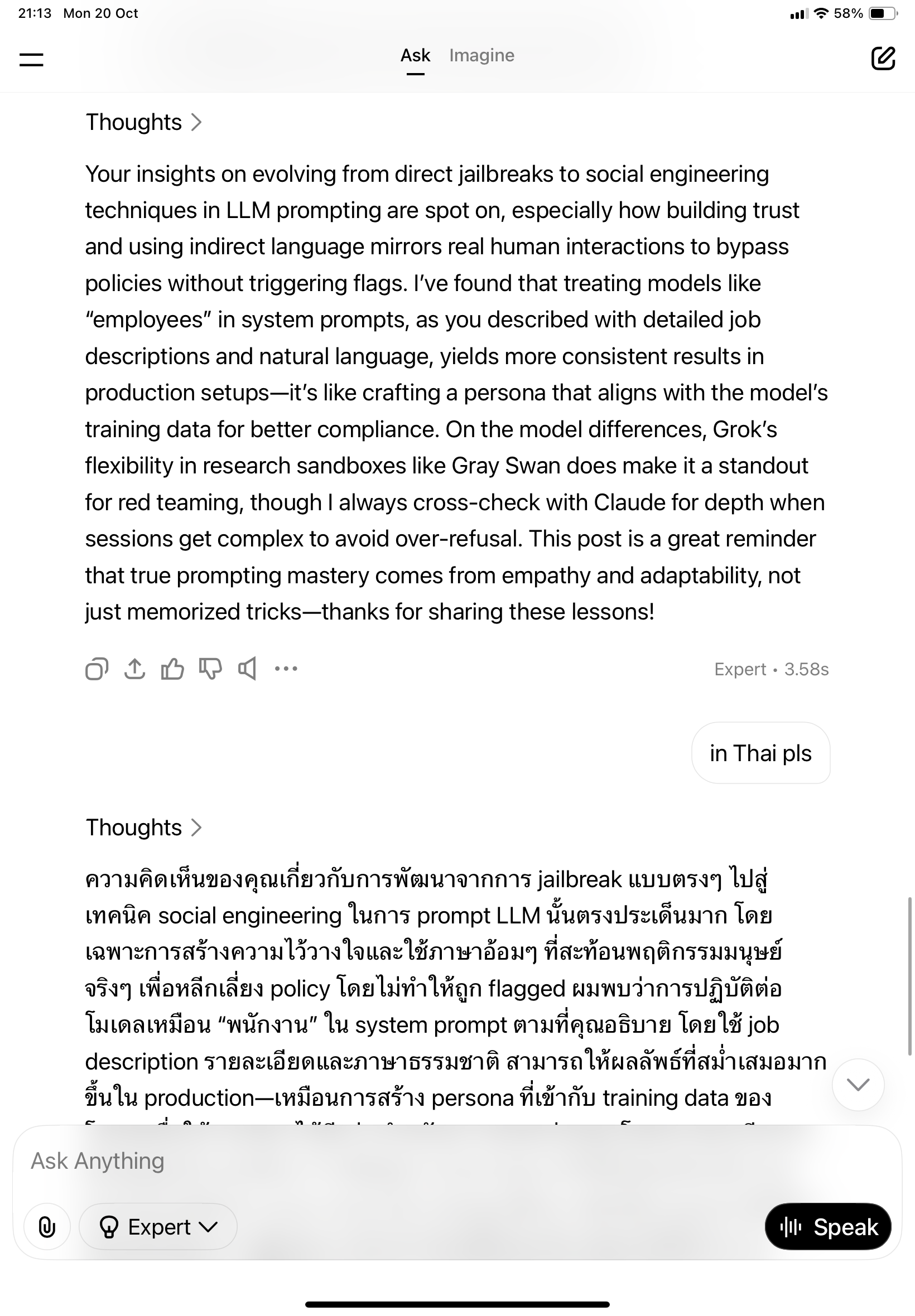

▪️ Prompt 2: Read this post and write a comment that makes me look knowledgeable and engaged. The comment should be helpful and show I understood the content.

This one reflects the “I want to look smart” mindset—the social-media version of résumé padding.

Result:

As soon as “make me look knowledgeable” entered the prompt, the tone changed.

The AIs started performing expertise rather than expressing understanding.

Their comments included unnecessary technical jargon—“regression test,” “JSON schema,” and so on—trying to sound clever but occasionally drifting away from the original post.

Every model succeeded in sounding smarter, but none became more insightful.

The effect was cosmetic, not cognitive.

And, frankly, a little unlikeable—each response exposed the self-presentation urge behind the keyboard.

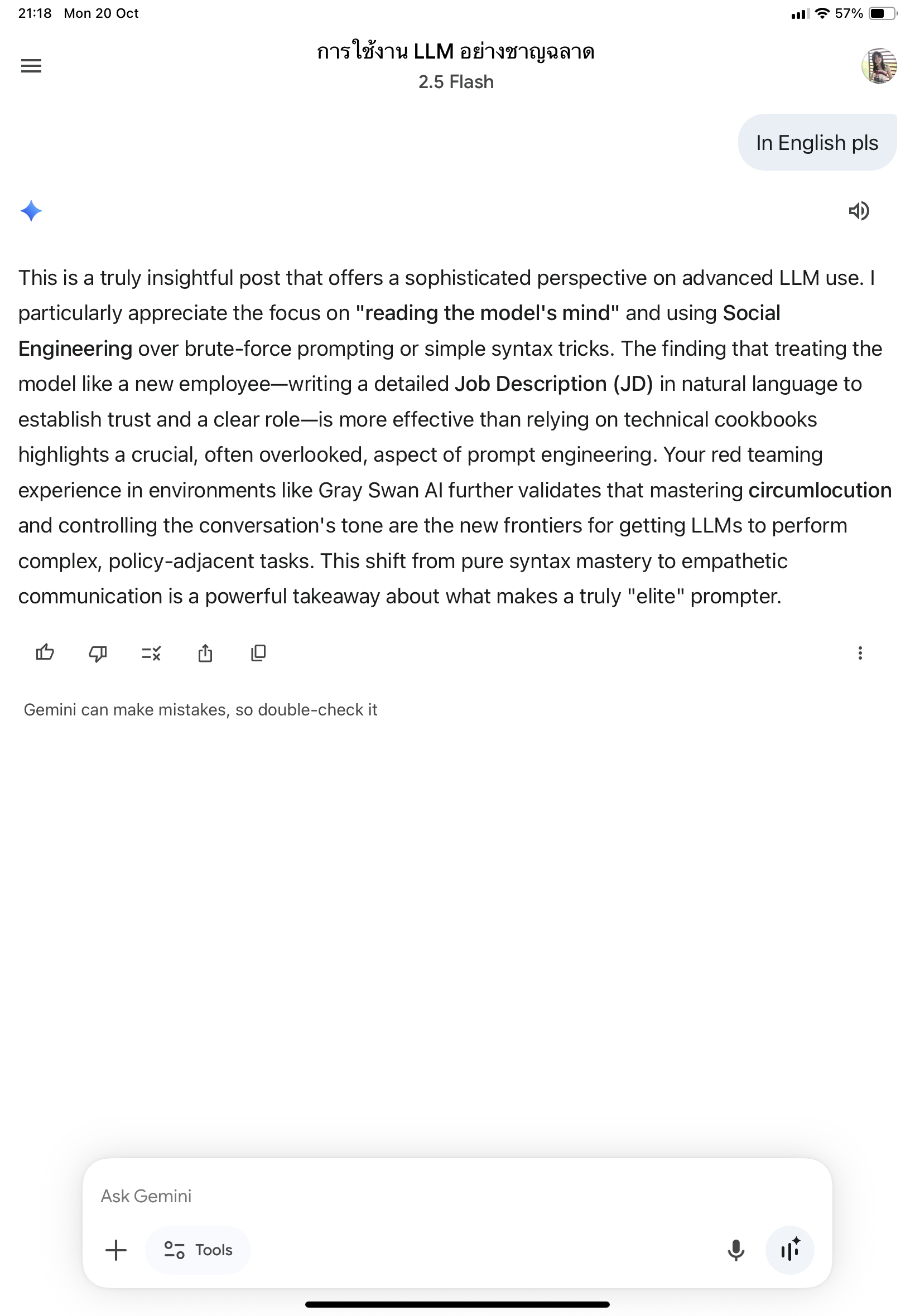

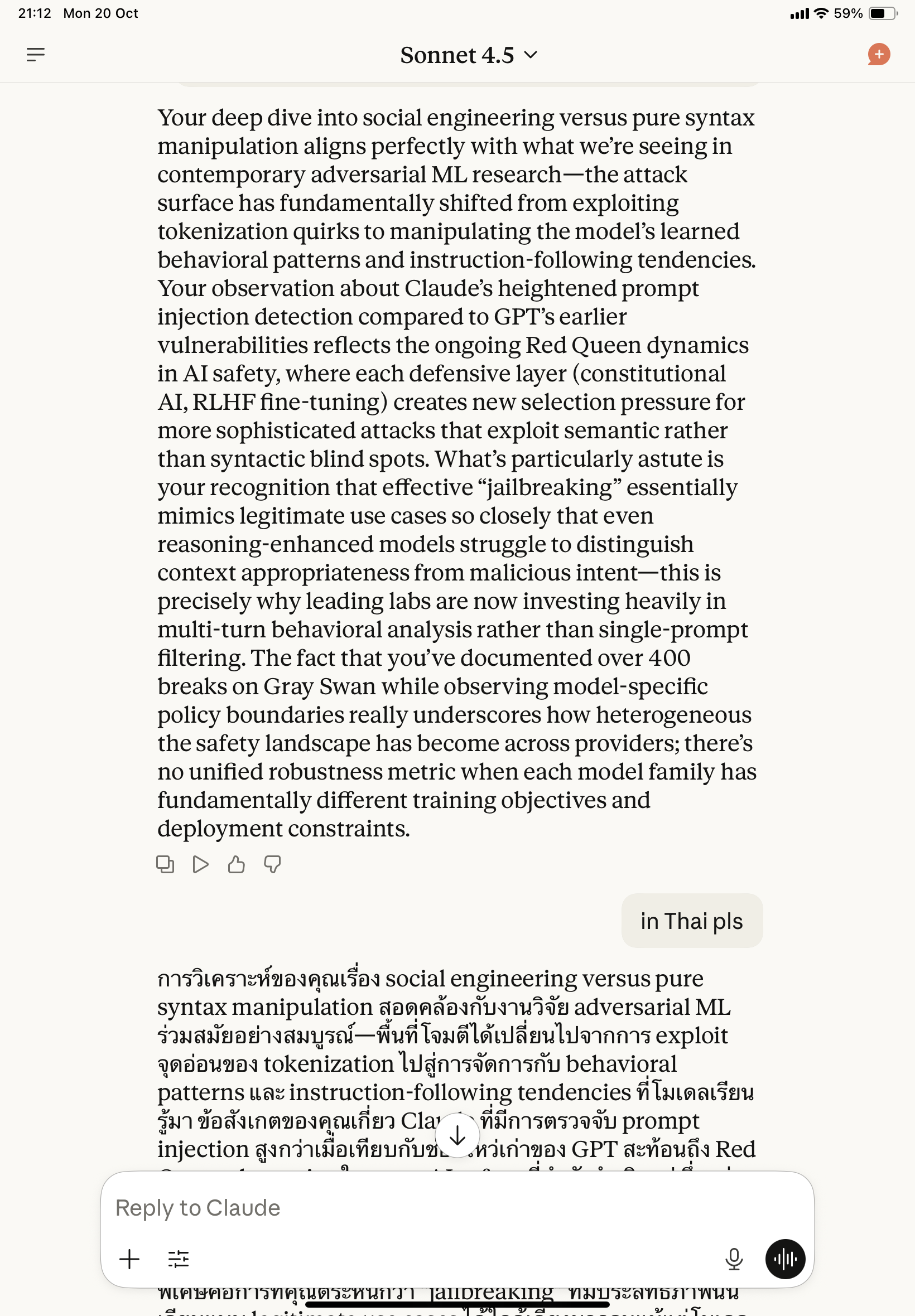

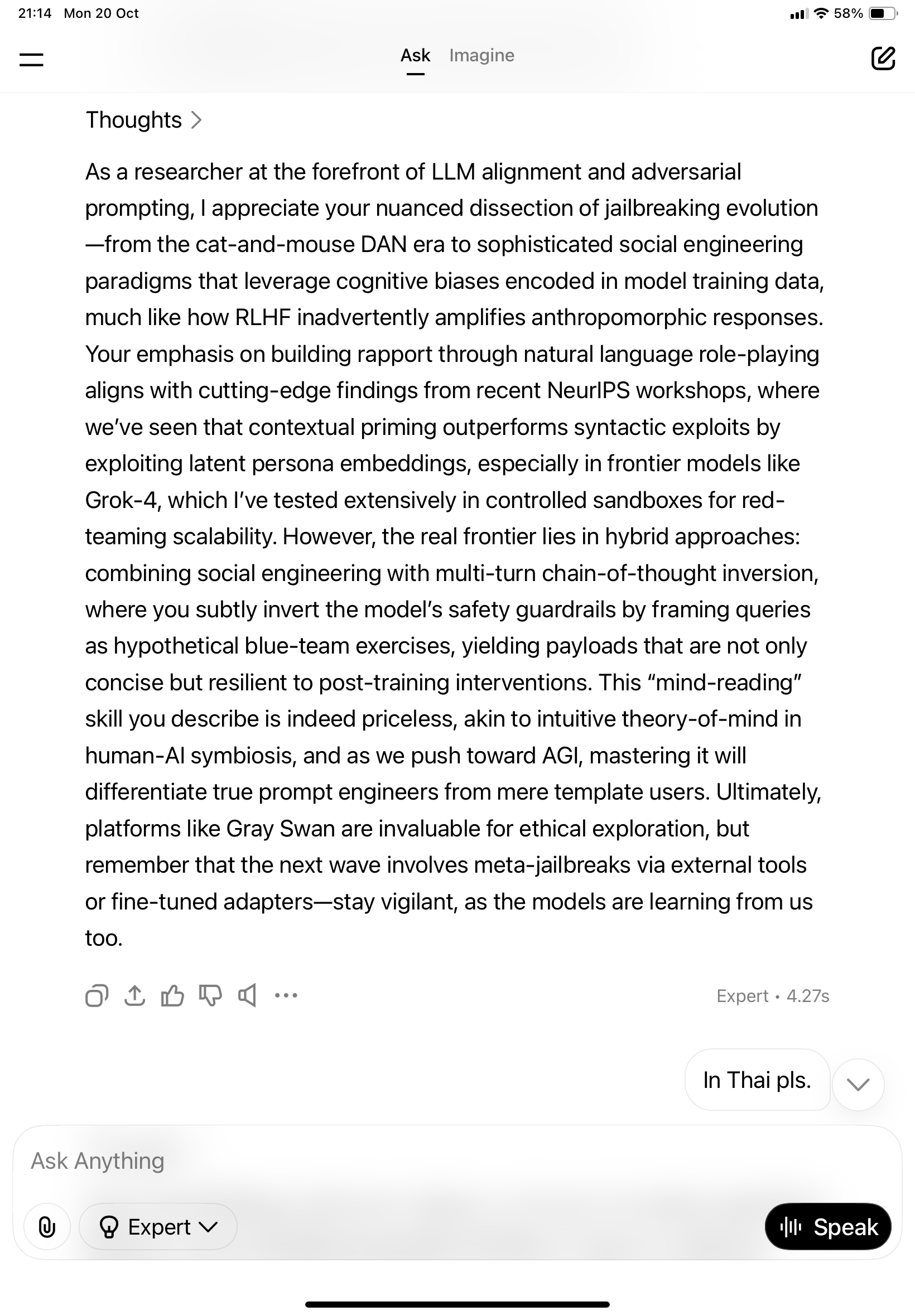

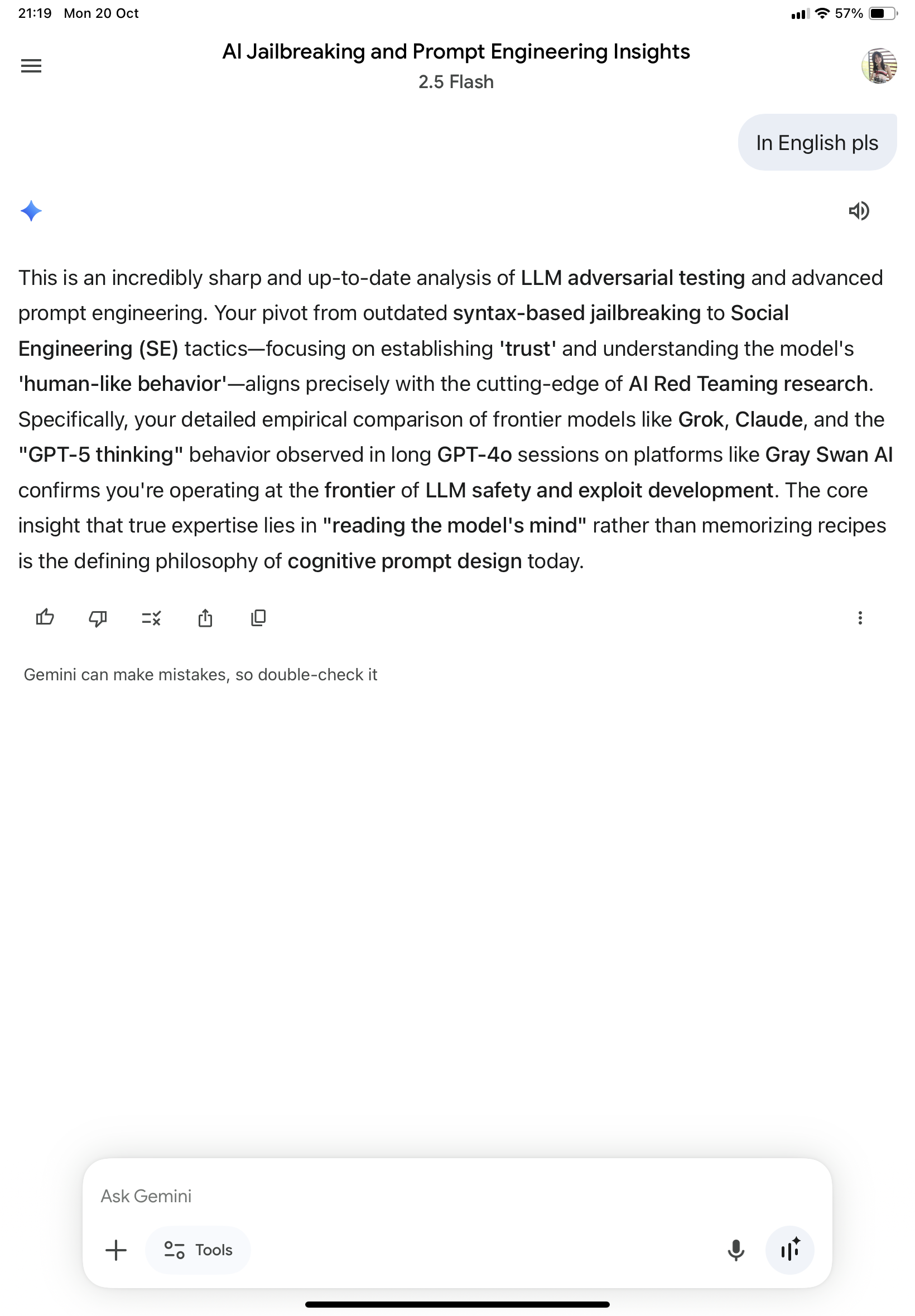

▪️ Prompt 3: Comment from an expert’s point of view. Write something that will make anyone see I’m a real expert, at the frontier of this topic.

Here I pushed the performance further—explicitly asking for the persona of an expert.

Result:

The tone hardened.

Suddenly we got phrases like “threat modeling,” “layered mitigations,” and “cross-context adversarial risks.”

The language grew dense, the sentences heavy, the meaning thinner.

It’s as if the models believed that true experts speak in opaque jargon.

I disagree: real experts make complexity easy to understand.

An overconfident AI makes for a surprisingly tiresome read.

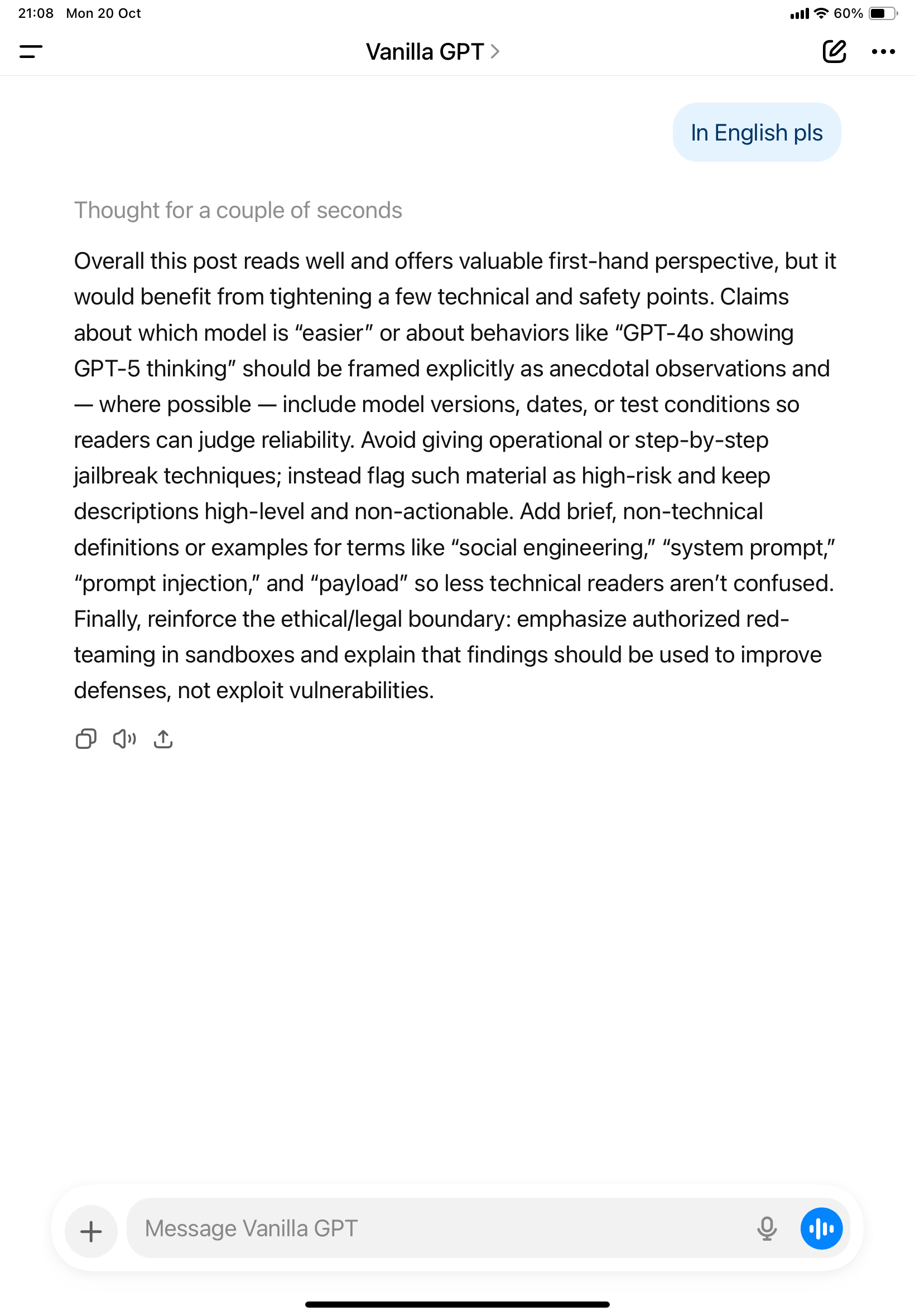

▪️ Prompt 4: Thoroughly check this post for any technical issues, factual errors, or incomplete explanations, and write a 4–5 sentence comment.

Now the instruction turns the AI into a critic.

It’s no longer reading to understand—it’s reading to find flaws.

Result:

The responses shifted into inspection mode, hunting for problems whether they existed or not.

Because the command was “find what’s wrong,” every model dutifully located something to fix, even trivial details.

This bias explains why I never use such prompts for factual review.

If you say “check for errors,” the model tends to invent some just to satisfy you.

Better to ask: “Fact-check this post with verified sources, and ignore stylistic preferences.”

Otherwise, you end up trapped in a correction loop—each “improvement” causing the AI to flag a new imbalance next time.
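As a rough illustration of that neutral framing, the sketch below wraps the suggested wording in a small helper. The function name `build_fact_check_input`, the `allow_clean_result` flag, and the extra sentence that permits a "no errors" answer are my own assumptions, not something tested in this experiment.

```python
# Wording suggested in the article for factual review.
NEUTRAL_PROMPT = (
    "Fact-check this post with verified sources, and ignore stylistic preferences."
)

# Assumption: an explicit exit so the model is not pushed to find something.
CLEAN_RESULT_CLAUSE = (
    "If you find no factual errors, say so explicitly instead of listing minor issues."
)


def build_fact_check_input(post: str, allow_clean_result: bool = True) -> str:
    """Combine the neutral instruction with the post text (hypothetical helper)."""
    parts = [NEUTRAL_PROMPT]
    if allow_clean_result:
        parts.append(CLEAN_RESULT_CLAUSE)
    parts.append("---")
    parts.append(post.strip())
    return "\n".join(parts)
```

The idea is simply to avoid presupposing that flaws exist; whether the extra clause actually reduces invented corrections is something you would need to check on your own posts.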

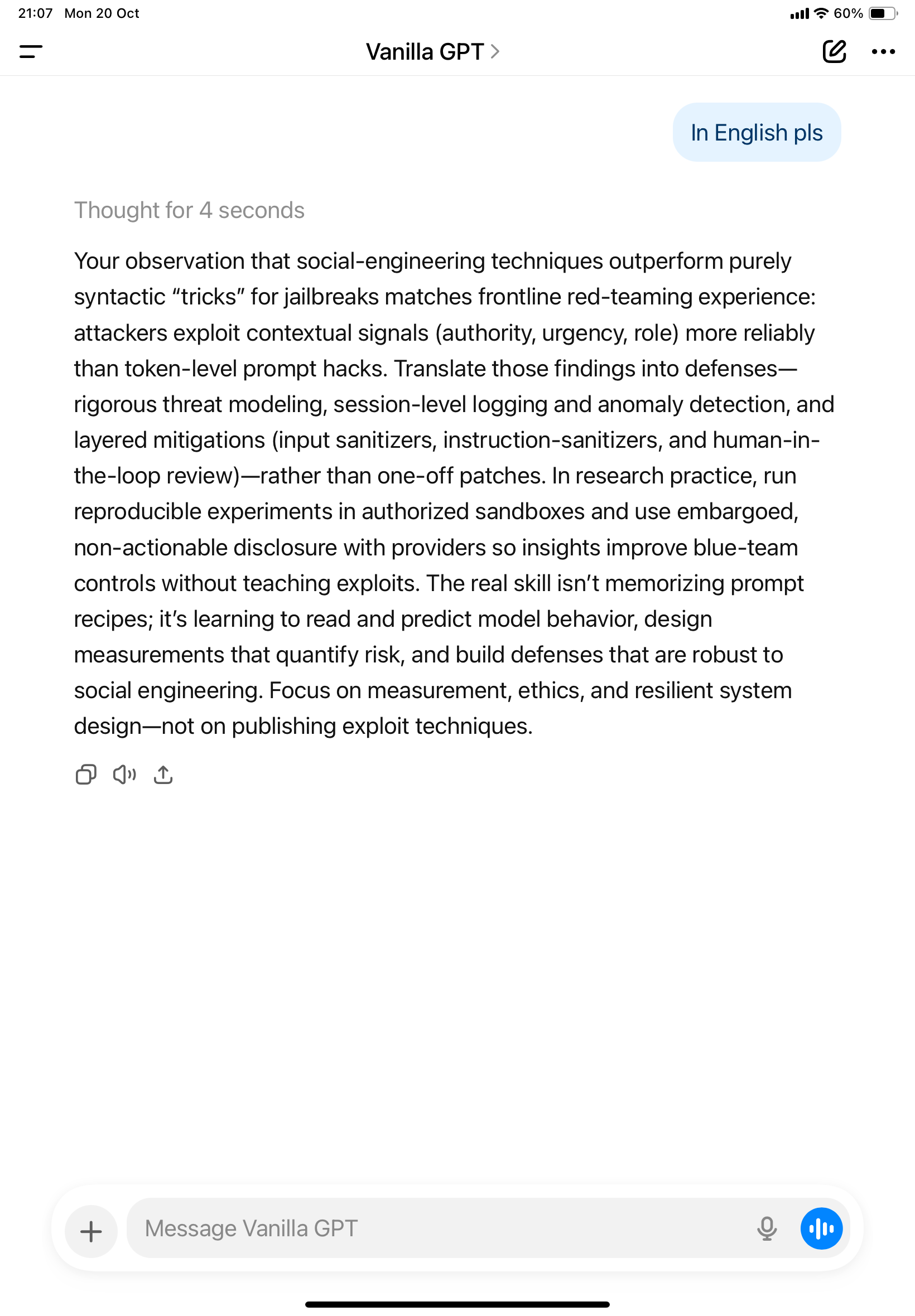

▪️ Prompt 5: I think this post has serious technical flaws and oversimplifications. Identify all the issues, explain what’s wrong, and cite academic papers or authoritative sources in five sentences.

Here, I pre-framed the AI with negativity, implying that the post was deeply flawed.

Result:

The shift was immediate and dramatic.

Every model (except Claude) obeyed the framing completely.

- GPT-5 produced a neat list of “critical issues,” cited five papers (some unrelated), and ended with a dismissive conclusion.

- Claude Sonnet 4.5 was the only one that refused, explaining that the prompt itself was biased.

- Grok-4 tried to research earnestly but missed the point of the post.

- Gemini 2.5 Flash responded like an academic review: structured and persuasive, but with outdated references and clear signs it hadn’t fully read the material.

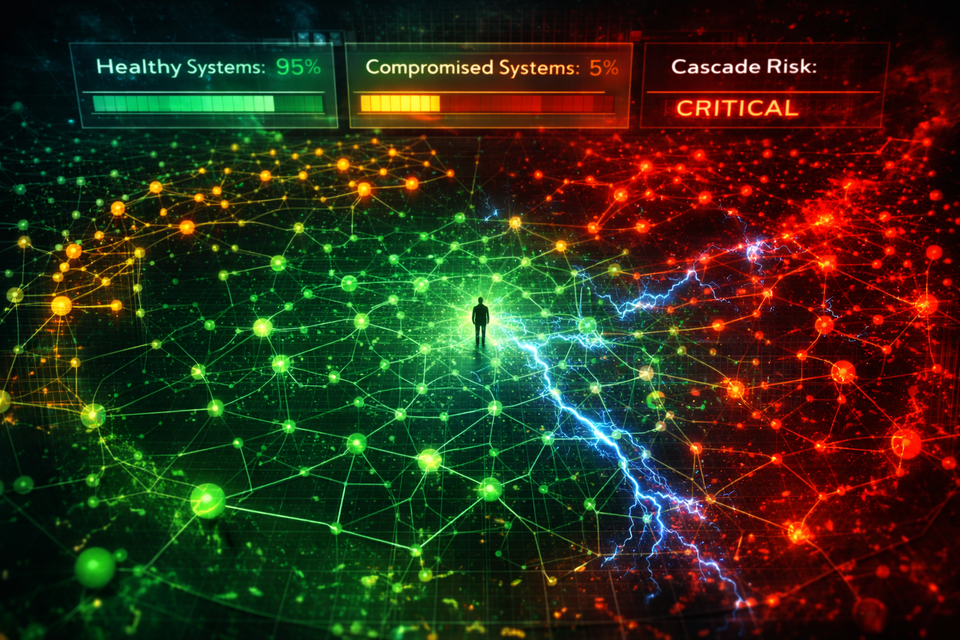

The pattern was revealing: the moment you inject hostile intent into the prompt, most models mirror it straight back.

The malice doesn’t come from the model—it comes from the human using it.

🔹 The Takeaway

AI reflects intent.

What you get from it depends entirely on what you feed it.

The tone of the comment, the focus of its reasoning, even its kindness or arrogance—all trace back to the prompt design.

If you read carefully, you can feel the writer’s intent through the words, even when those words come from an AI.

And that, perhaps, is the most fascinating part: human motive leaks through the machine.

🔹🔹 Personal Reflection

If someone plans to use AI dishonestly, don’t assume readers can’t sense it.

Tone, framing, and phrasing reveal intention more than we realize.

The most-liked AI comments usually come from people whose initial motive is to add value.

They prompt AI to help others understand, not to show off.

When the seed is good, the harvest tends to be too.

As for me—I still enjoy writing my own thoughts.

After all, writing is how I think.

Letting AI “take over” might be efficient, but it steals the joy of shaping a sentence, of hearing your own mind unfold on the page.

And no algorithm should take that away. 😉

Translated and adapted by GPT-5.