A History of Bot Trading: From DOT to LLMs


This week I’m switching to finance—drawing from a personal project to build an AI portfolio manager. Think of this as a light, narrative tour: not only a history of technology, but an evolution of financial thinking.

🔹 Origins: when machines started sending orders (1970s–1980s)

1976—NYSE launches DOT (Designated Order Turnaround).

Early on it was just an electronic order pipe, routing orders to the floor in seconds instead of minutes. Think of the jump from fax to email.

If computers can execute instructions accurately, why not let them decide with rules?

That question birthed program trading: “If price drops 5%, sell index futures to hedge.”

The movement took cues from Black–Scholes (published 1973), which linked five drivers of option value:

- Current price

- Strike price

- Time to maturity

- Interest rate

- Volatility

The upshot: a rational “fair value” calculator for options. If we can hedge options, maybe we can hedge entire portfolios.
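To make the "fair value calculator" concrete, here is a minimal sketch of the textbook Black–Scholes formula for a European call on a stock that pays no dividends. The function and parameter names are my own, and the inputs (spot 100, strike 100, one year, 5% rate, 20% volatility) are purely illustrative. The second function returns the call's delta, the hedge ratio that the "hedge options, then hedge portfolios" idea builds on.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution, via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def _d1(spot: float, strike: float, years: float, rate: float, vol: float) -> float:
    """The d1 term shared by the price and delta formulas."""
    return (math.log(spot / strike) + (rate + 0.5 * vol ** 2) * years) / (
        vol * math.sqrt(years)
    )


def black_scholes_call(spot: float, strike: float, years: float,
                       rate: float, vol: float) -> float:
    """Fair value of a European call (no dividends) from the five drivers."""
    d1 = _d1(spot, strike, years, rate, vol)
    d2 = d1 - vol * math.sqrt(years)
    return spot * norm_cdf(d1) - strike * math.exp(-rate * years) * norm_cdf(d2)


def call_delta(spot: float, strike: float, years: float,
               rate: float, vol: float) -> float:
    """Shares of stock per option needed to offset small price moves."""
    return norm_cdf(_d1(spot, strike, years, rate, vol))


# Illustrative inputs only (not market data).
price = black_scholes_call(spot=100.0, strike=100.0, years=1.0, rate=0.05, vol=0.20)
delta = call_delta(spot=100.0, strike=100.0, years=1.0, rate=0.05, vol=0.20)
print(f"call value ~ {price:.2f}, delta ~ {delta:.2f}")
```

With these inputs the call is worth roughly 10.45 and the delta is about 0.64, so a seller of the option would hold about 0.64 shares per option to stay hedged against small moves.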

1984—SuperDOT arrives; throughput jumps.

Oct 19, 1987—Black Monday.

“Portfolio insurance” and program trading amplified a selloff into a feedback loop:

- Market dips → funds sell futures to hedge

- Futures fall → arbitrageurs sell cash equities

- Equities fall more → funds add hedges

- Spiral until liquidity vanishes

Other macro worries (valuations, rising rates, trade frictions, fragile sentiment) formed the backdrop, but automation synchronized behavior and sped up the slide.
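The spiral can be illustrated with a deliberately crude toy model. This is a sketch under loud assumptions: `feedback_gain` is a made-up number for how many percent of extra decline the hedging and arbitrage selling causes per percent of the previous decline. It is not calibrated to 1987 or to any real market.

```python
def simulate_spiral(initial_drop_pct: float, feedback_gain: float,
                    rounds: int = 10) -> list[float]:
    """Price path when each decline triggers more hedging sales.

    feedback_gain is a hypothetical parameter: below 1 the selling fades
    out, above 1 every round of selling is larger than the last.
    """
    price = 100.0
    path = [price]
    drop = initial_drop_pct
    for _ in range(rounds):
        price *= 1.0 - drop / 100.0   # market falls by the current drop
        path.append(price)
        drop *= feedback_gain         # hedgers react to the latest decline
    return path


damped = simulate_spiral(initial_drop_pct=3.0, feedback_gain=0.5)
amplified = simulate_spiral(initial_drop_pct=3.0, feedback_gain=1.1)
print(f"gain 0.5 ends near {damped[-1]:.0f}, gain 1.1 ends near {amplified[-1]:.0f}")
```

The same 3% dip settles after a modest loss when the gain is below 1, and keeps accelerating when the gain is above 1. That threshold is the whole story of the toy: synchronized automation is what pushes the gain up.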

🔹 When machines learned the market’s “language” (1990s)

Post-’87, trust was shaken—but tech marched on.

ECNs (electronic communication networks, e.g., Instinet’s revival in the 1990s, Archipelago 1996) enabled direct electronic matching and DMA (direct market access).

Decimalization (2001) replaced fractions (1/8, 1/16) with cents (…50.00 → 50.01 → 50.02).

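It looked like a cosmetic change, but it was a huge market-microstructure shift: the minimum price step shrank from 1/16 of a dollar (6.25 cents) to one cent, spreads collapsed, and software harvested tiny edges faster than humans.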

HFT’s rise was not decimalization alone; it was decimalization + Reg NMS + faster infrastructure.

🔹 The speed race (2000s)

Global trading firms co-located servers at exchanges—latency in microseconds.

A one-microsecond edge compounded over tens of millions of shares is a structural advantage. Firms even paid for slightly shorter cables. A dedicated Chicago–NY fiber line cut latency by ~3 ms, at a cost of hundreds of millions of dollars.

Reg NMS (2005; fully implemented 2007) required orders to be routed to the venue showing the NBBO (National Best Bid and Offer, the best displayed price across venues).

Unintended consequence: latency arbitrage—profit from tiny timing gaps between venues.

Example: NASDAQ ticks from 100.00 to 100.01 while NYSE still shows 100.00. A fast bot buys at NYSE, sells at NASDAQ. $0.01 per share looks trivial—until you do it millions of times a day.
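The arithmetic behind "trivial until you do it millions of times" is easy to sketch. The trade size and trade count below are hypothetical round numbers chosen for illustration, and the optional `fee_per_share` is an assumption added to show how thin the margin is. `Decimal` is used so one-cent prices stay exact.

```python
from decimal import Decimal


def latency_arb_pnl(buy_price: Decimal, sell_price: Decimal,
                    shares_per_trade: int, trades_per_day: int,
                    fee_per_share: Decimal = Decimal("0")) -> Decimal:
    """Daily profit from repeatedly capturing a stale-quote price gap."""
    edge_per_share = sell_price - buy_price - fee_per_share
    return edge_per_share * shares_per_trade * trades_per_day


# Buy at the stale NYSE quote, sell at the updated NASDAQ quote.
# 100 shares per trade and 1,000,000 trades per day are illustrative only.
pnl = latency_arb_pnl(Decimal("100.00"), Decimal("100.01"),
                      shares_per_trade=100, trades_per_day=1_000_000)
print(pnl)  # 1000000.00

# A hypothetical fee of 0.3 cents per share removes 30% of the edge.
pnl_after_fees = latency_arb_pnl(Decimal("100.00"), Decimal("100.01"),
                                 100, 1_000_000, fee_per_share=Decimal("0.003"))
print(pnl_after_fees)  # 700000.000
```

One cent per share becomes a million dollars a day at that scale, which is why fees, rebates, and microseconds all became worth fighting over.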

Reg NMS also encouraged venue fragmentation (50+ lit exchanges and dark pools), quote stuffing, and maker–taker fee games. Competition shifted from information and analysis to speed and plumbing, and HFT became the market’s heartbeat.

May 2010—Flash Crash.

On May 6, 2010, a mutual fund’s algorithm sold E-Mini S&P 500 futures at scale without pacing limits. With a fragmented market structure, algorithms running without limits, and HFTs pulling liquidity, the market briefly became a vacuum.
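What would a "pacing limit" look like in code? Here is a minimal sketch of a sell algorithm that slices a large order as a share of recent market volume but stops when the price falls through a floor. Every name and number here is hypothetical. This is not a reconstruction of the algorithm involved that day, only an illustration of the kind of check that was missing.

```python
def next_sell_slice(remaining: int, recent_market_volume: int,
                    participation_bps: int, last_price: float,
                    price_floor: float) -> int:
    """Contracts to sell in the next interval; 0 means pause.

    participation_bps: target share of market volume in basis points
    (900 = 9%). price_floor: hypothetical level below which the
    algorithm refuses to keep selling.
    """
    if last_price < price_floor:
        return 0  # pacing limit: do not chase a falling market
    target = recent_market_volume * participation_bps // 10_000
    return min(remaining, target)


# Calm market: sell 9% of the last interval's volume.
calm = next_sell_slice(remaining=75_000, recent_market_volume=10_000,
                       participation_bps=900, last_price=1130.0,
                       price_floor=1120.0)

# Falling market: volume spikes, but price is below the floor, so pause.
stressed = next_sell_slice(remaining=75_000, recent_market_volume=80_000,
                           participation_bps=900, last_price=1100.0,
                           price_floor=1120.0)
print(calm, stressed)  # 900 0
```

Without the price check, a pure volume-participation rule sells more as panic volume rises, which is exactly the wrong moment to speed up.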

Some stocks printed at $0.01, others at five figures. Single-stock circuit breakers and, later, LULD (Limit Up-Limit Down) price bands were added to blunt such cascades.

For the vibe of that era, Michael Lewis’s Flash Boys captures it well.

🔹 When everyone got a bot (2010s–2020s)

What began as a hedge-fund edge went mainstream.

MetaTrader 4 (2005) let retail traders code EAs (Expert Advisors) for FX. Great rules = 24/7 helper. Bad rules = disciplined losses.

Crypto’s 24/7 markets made bots feel less optional and more necessary.

🔹 When bots started to understand our language

LLMs (GPT, Claude) are being tested for news/text analysis and sentiment, but live autonomous trading remains risky.

Some pilots read earnings call transcripts to infer management confidence. BloombergGPT focuses on internal analysis/search; FinBERT is a sentiment model—neither is a plug-and-play trading bot.

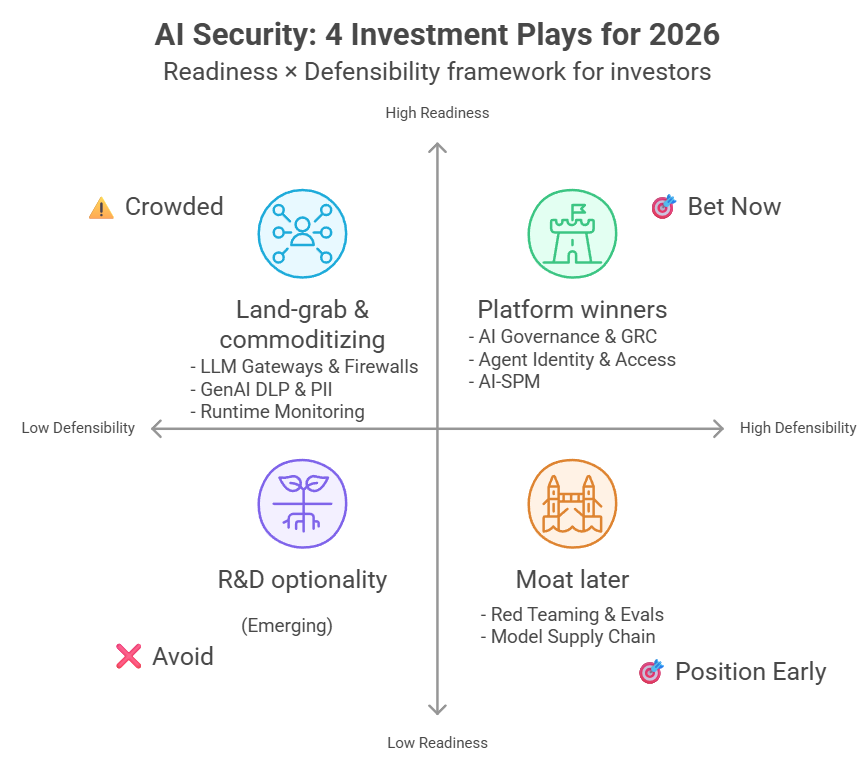

For fully automated LLM trading, three unresolved risks dominate:

- Prompt injection — hidden instructions can hijack behavior.

- Hallucination — models assert fabricated “facts” with high confidence.

- Lack of explainability — opaque reasoning vs. classical ML with tractable features.

In finance, one misread can cost millions. Today, production trading AI remains mostly specialized ML (tree models, domain-tuned neural nets). LLMs are best used as co-pilots (sentiment review, summaries, compliance checks), not autopilots that click the button.

🔹 Personal view

From DOT (1976) to LLMs (2025), tech sprinted—and so did risk.

Black Monday, the Flash Crash, and other crises remind us: machine cleverness can create new failure modes—especially when everyone is clever the same way.

We can’t go back to shouting on the floor. For my AI portfolio-manager project, the path is “co-pilot with guardrails”: analyze, rank risks, and enforce constraints—rather than free-running execution.
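As a rough illustration of "co-pilot with guardrails", the sketch below lets a model propose an order but runs it through deterministic checks before anything reaches a human or a broker. All class names, fields, and limits are hypothetical and invented for this post; a real system would need far more (audit logs, price checks, kill switches).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProposedOrder:
    symbol: str
    side: str         # "buy" or "sell"
    notional: float   # order value in account currency
    rationale: str    # free-text explanation from the model, never executed


@dataclass(frozen=True)
class Limits:
    allowed_symbols: frozenset
    max_order_notional: float
    max_position_notional: float


def check_order(order: ProposedOrder, limits: Limits,
                current_position_notional: float) -> list[str]:
    """Return the violated constraints; an empty list means 'pass to review'."""
    violations = []
    if order.symbol not in limits.allowed_symbols:
        violations.append("symbol not on the approved list")
    if order.side not in ("buy", "sell"):
        violations.append("unknown side")
    if order.notional <= 0 or order.notional > limits.max_order_notional:
        violations.append("order size outside limits")
    signed = order.notional if order.side == "buy" else -order.notional
    if abs(current_position_notional + signed) > limits.max_position_notional:
        violations.append("resulting position exceeds cap")
    return violations


limits = Limits(frozenset({"SPY", "QQQ"}), max_order_notional=10_000.0,
                max_position_notional=50_000.0)

ok = check_order(ProposedOrder("SPY", "buy", 5_000.0, "rebalance"), limits, 0.0)
blocked = check_order(ProposedOrder("GME", "buy", 20_000.0, "news says buy now"),
                      limits, 45_000.0)
print(ok)       # []
print(blocked)  # three violations: symbol, size, position cap
```

The point of the design is that the checks never read the model's rationale. A hallucinated fact or an injected instruction can change what the model proposes, but it cannot change the limits.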

Learn fast. Know how it works. Know where the risk hides.

In markets where machines trade faster than humans can think, the survivors are those who understand the game better, not just those who play it faster.

Translated by GPT-5.