Mapping the 2026 AI Security Market: Who's Winning, Who's Being Absorbed, and What Gaps Remain Open

ภาษาอื่น / Other language: English · ไทย

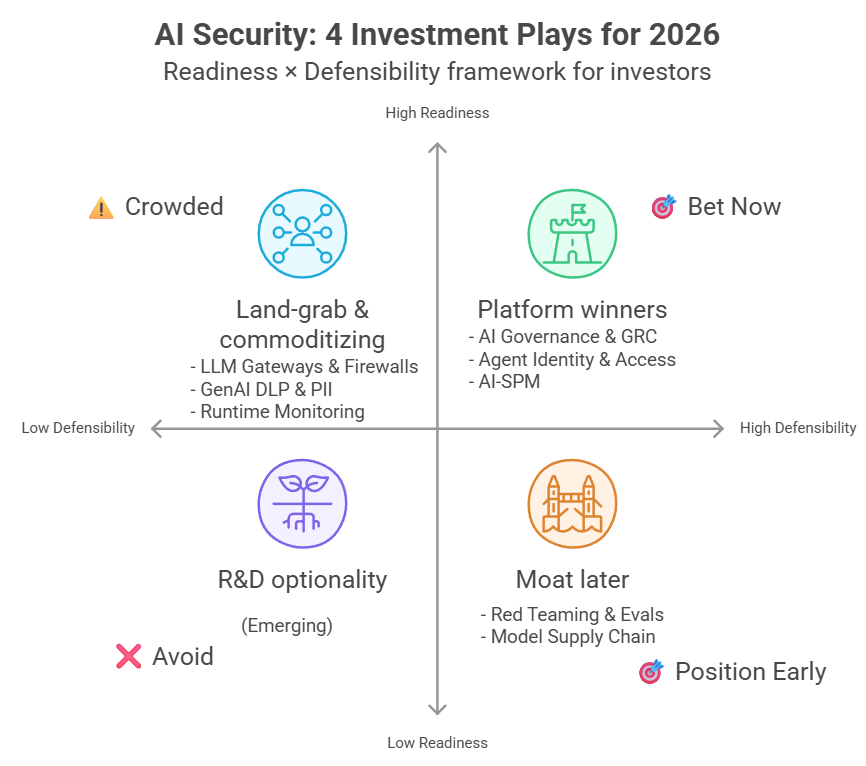

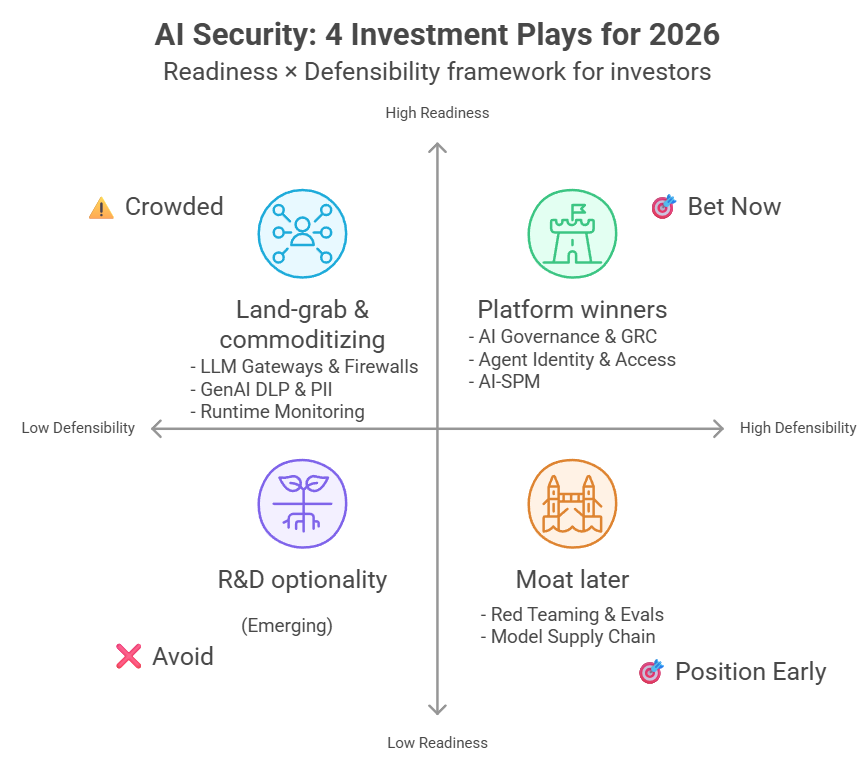

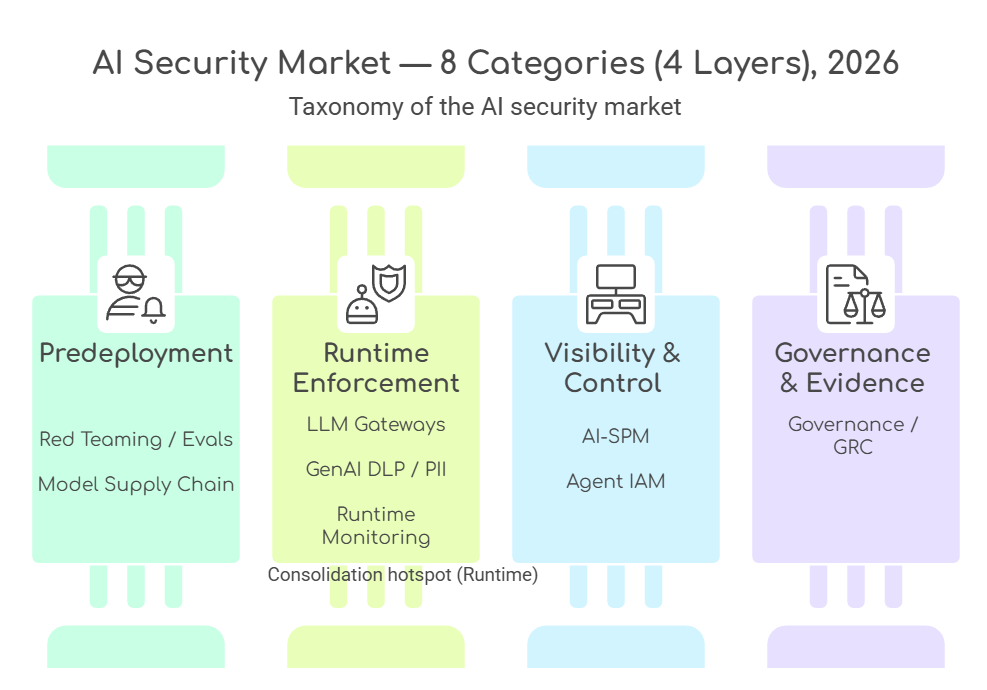

An early 2026 analysis of the AI Security market through an investor lens: where is the market heading, which categories are winning, what will become commoditized, and where would you place your bets today?

What is AI Security? And Why It’s a Multi-Layer Market, Not a Single Product

Before diving into details, let’s establish a shared baseline.

When organizations deploy AI or LLMs (Large Language Models like ChatGPT, Claude, and Gemini) in production, whether for customer-facing chatbots, document summarization, coding copilots, or autonomous agents, new risk classes emerge.

Some of these risks are fundamentally different from what traditional security systems were designed to handle.

Common examples include employees unintentionally entering confidential information into chat interfaces; attackers embedding malicious instructions in documents or retrieved data that the model ingests (indirect prompt injection); model artifacts from open-source sources carrying backdoors or malicious payloads (including risks from unsafe serialization and deserialization); or AI agents with access to critical systems being manipulated into taking actions outside their intended authorization.

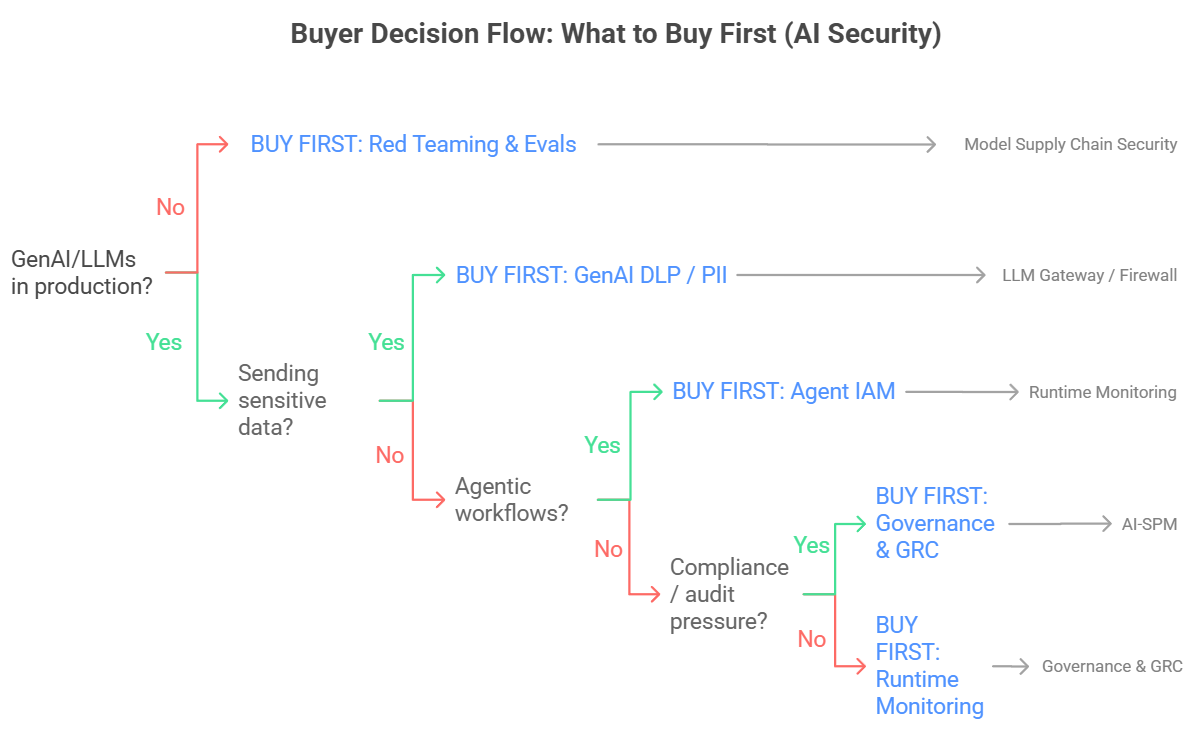

AI Security therefore refers to a suite of tools and services designed to manage these risks.

The key point: AI Security is not a single product you buy and you’re done. It is a layered set of controls that wraps around AI usage inside an organization, much like building security does not rely on one mechanism but combines keycard access, CCTV, safety systems, security staff, policies, and compliance checks. In practice, some tools span multiple layers.

For simplicity, we can break the main layers down as follows:

- Pre-deployment assurance: Tests systems before production from both security and quality perspectives, including verifying external models and artifacts before deployment.

- Runtime enforcement: Enforces policies where applications call models, for example filtering risky prompts, redacting PII, or constraining agent permissions.

- Visibility and discovery: Shows where AI is used, what data flows through it, which configurations are risky, and where exposure points exist.

- Evidence and auditability: Manages policies, records approvals, and produces an audit-ready trail for compliance reviews.

In other words, the competitive game is not just “who detects better.” It is “who owns the control points organizations rely on every day,” by centralizing workflow, policy, telemetry, and distribution in a single control plane.

Eight Categories You Need to Know

The “layers” view is the conceptual framework. For market analysis, we can map real-world solutions into eight categories, and some categories can sit within the same layer.

Taken together, these categories represent a re-assembly of the traditional security stack for the GenAI era.

- AI Red Teaming & Evals: Attack simulation and evaluation before production deployment.

- Model Supply Chain Security: Verifying the source and integrity of models, including related files and components, before use.

- LLM Firewalls / Gateways: Front-line controls that enforce security rules on prompts and responses, such as blocking risky instructions and masking sensitive data.

- GenAI DLP (Data Loss Prevention) & PII Redaction: Preventing sensitive data and PII from leaking through AI workflows.

- AI Runtime Monitoring: Real-time monitoring of model behavior during production use.

- AI-SPM (AI Security Posture Management): Discovering unsanctioned AI usage (shadow AI) and identifying risky or misconfigured settings across the organization.

- Agent Identity & Access: Managing AI agent identities and permissions to define who can do what, with auditability for after-the-fact review.

- AI Governance & GRC (Governance, Risk, Compliance): Oversight and compliance systems for policies, approvals, and audit evidence.

Before summarizing the overall picture, let’s look at the real game in each category.

Deep Dive Into Each Category: What's the Real Game

1) AI Red Teaming: Tools Are Becoming Baseline, but Expertise Still Commands a Price

This category is about proactive testing to surface vulnerabilities early, by simulating attacker behavior and running high-risk test cases before systems go into production. What’s notable today is that “basic testing tools” have become much more accessible because there are several open source options, such as NVIDIA’s Garak, Promptfoo, and Inspect from the UK AI Security Institute. As a result, the test harness itself is increasingly becoming a baseline that teams can extend on their own.

However, what still drives real outcomes is usually not the tooling alone, but the combination of: (1) the ability to design complex test suites and attack scenarios, (2) coverage of hard cases such as indirect prompt injection, agentic scenarios, and memory poisoning, and (3) services that help organizations test continuously in cycles, rather than running a one-off assessment and stopping there.

As an example on the intellectual property side, Adversa AI holds patent US11275841B2. It describes an approach to evaluating vulnerabilities in AI applications by testing “multiple defense measures in combination” against at least two different attack types (not merely small variations of prompt text), using at least one dataset. The system then adjusts to select a defense set that is more computationally efficient, such as reducing resource usage or runtime.

2) Model Supply Chain: Not Yet Standardized, but Increasingly Hard to Avoid

This category is about “checking the model before putting it into real use” by inspecting model files and related artifacts to see whether anything abnormal is present, or whether there are pathways that could allow the system to be controlled, such as hidden malicious code or backdoors.

Another critical risk is that certain file types can trigger code execution when they are loaded into a program (unsafe deserialization). Teams also need to verify the trustworthiness of the source, and the integrity and provenance of the files.

One example is HiddenLayer, which promotes the concept of an AIBOM to inventory model components and enable traceability, and claims support for multiple major model formats in its product documentation.

What is an AIBOM?

An AIBOM (AI Bill of Materials) is a “component list” for an AI model, similar to an SBOM in the software world.

It is used to build an inventory and to trace what files and components a model includes, where they came from, and what they have gone through.

Protect AI offers both an enterprise product such as Guardian and an open source tool called ModelScan. After being acquired by Palo Alto Networks, Protect AI’s work has increasingly been integrated into Palo Alto’s security platform.

Another example is JFrog, which Hugging Face has said it partners with to expand model scanning on the Hugging Face Hub and display scanning results directly on model pages.

On the Bosch AIShield side, AISpectra is positioned as a tool that scans and discovers AI assets to assess risk. Watchtower is an open source tool for scanning both model files and the notebook files used to develop models (such as Jupyter Notebooks) in the context of the AI and ML supply chain.

AIShield also states that it was listed as a Representative Vendor in Gartner’s 2025 Market Guide for AI TRiSM (AI Trust, Risk and Security Management, meaning the management of AI trustworthiness, risk, and security).

What makes this category “asymmetric in risk versus opportunity” is that if a major incident tied to models in production occurs, many organizations will rush to adopt these tools in a step-change. At the same time, efforts are emerging to push standardization around model signing and provenance verification, such as OpenSSF Model Signing and approaches based on Sigstore.

3) LLM Firewalls: From Rising Star to Commoditizing and Being Bundled

This category is the front line of LLM usage in organizations. It typically takes the form of a gateway or proxy, or an SDK or API hook that sits between applications and models. It inspects incoming prompts and outgoing responses in real time, then blocks or masks content when it detects risk, such as jailbreak attempts, policy violations, or sensitive data that should not leak.

This is one of the most crowded and competitive categories. AI-native startups sell inference protection and guardrails, while major security platforms embed similar controls into their existing suites. Open source tooling also gives teams a baseline they can extend, which puts pricing pressure on basic capabilities.

A number of M&A deals from 2024 to 2025 also clustered around this front-line layer, or broader runtime and lifecycle security positioned close to the inference boundary. That pattern suggests that, in some cases, acquiring capability can be faster than building it when large vendors want to move quickly.

Competition is rarely about whether a vendor “has a firewall.” It is about measurable production quality, including latency that does not slow systems down, detection that does not drive false positives through the roof, and integrations that fit cleanly into an organization’s existing stack.

This remains hard in production, especially for prompt injection that is not a direct user command but is hidden in documents, RAG-retrieved content, or tool outputs. In practice, robust systems focus on reducing impact and controlling blast radius, not assuming they can block every attack.

4) GenAI DLP (Data Loss Prevention) and PII Redaction: An Old Problem in a New Context

Preventing data leakage is not new. But once AI becomes a “new channel,” such as typing “commands or questions” (prompts) into GenAI tools, or granting Copilot (the AI assistant in Microsoft 365) access to workplace content, DLP must expand its scope to directly govern AI usage, not just email or files in the traditional way.

A key advantage for large incumbents is that they already have policies and data sensitivity labels in place, so they can enforce rules within existing enterprise workflows quickly. For example, Microsoft Purview DLP can define conditions that restrict the use of sensitive data in Copilot, both at the prompt level and at the level of labeled files and emails.

Startups that have a chance to create incremental value typically need clear differentiation, such as multilingual capability (Private AI states it supports around 50 languages and continues to add more), or approaches like a data privacy vault (segregated storage for sensitive data) and tokenization (replacing real data with tokens). For instance, Skyflow helps limit the spread of sensitive data without relying only on inline scanning.

On the other side, open source tools that teams can build on, such as Microsoft Presidio for PII detection and anonymization, further increase pricing pressure on baseline functionality in this category.

5) AI Runtime Monitoring: Starting with Observability But Ending Up at Security

This category involves tracking LLM system behavior during actual operation, such as monitoring latency and errors, watching token usage to estimate cost per request, collecting traces/logs for root cause analysis, and doing quality checks or some forms of evaluation to watch for issues like drift and quality signals related to hallucination.

The overall picture of this category has many usable open source options like Phoenix, Langfuse, and Helicone that teams can self-host and extend. At the same time, major observability platforms have already released LLM-specific capabilities, such as Datadog LLM Observability and Splunk's Observability for AI approach.

The competitive question is: if what organizations truly need is just traces and logs, how can AI-native players compete with existing platforms customers already have?

The differentiator that can create pull is typically systematizing evaluation and tying it to release processes, such as having regression history, organization-specific evaluation sets, and quality gates in CI/CD or release workflows to ensure "no pass, no release" so that observability evolves into a real security control, not just a retrospective viewing tool.

6) AI-SPM (AI Security Posture Management): Growing Fast, but at Risk of Becoming “Just a Feature”

AI-SPM is about making the organization’s AI footprint visible, so you can see where AI is being used and track the risks that come with it. Typical risks include shadow AI (AI usage that sits outside formal governance), misconfigurations, and exposure created by overly broad integrations or permissions. The concept is highlights close to CSPM (Cloud Security Posture Management, which focuses on cloud misconfiguration risk), but with the focus shifted specifically to AI systems and AI-related resources.

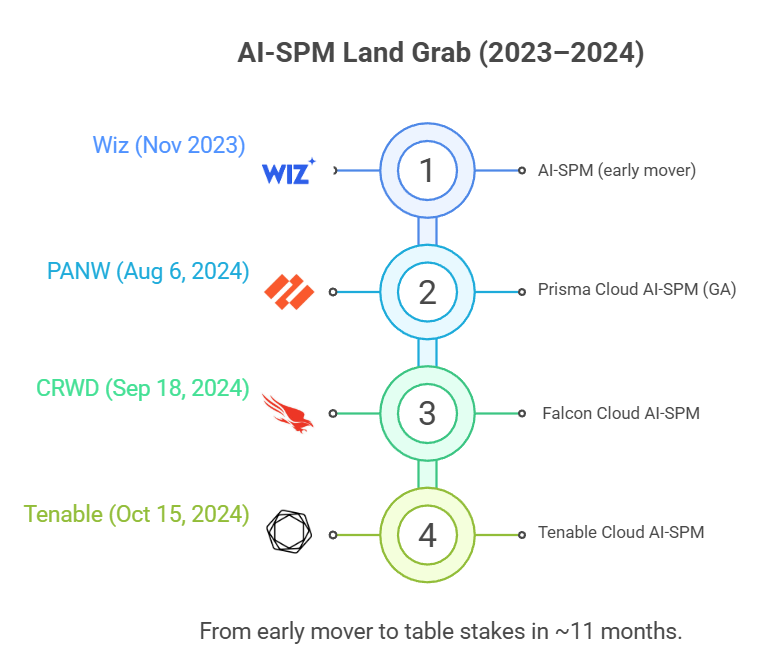

On the vendor side, Wiz launched AI-SPM as early as November 16, 2023. After that, large platforms began to follow. Examples include Palo Alto Networks’ Prisma Cloud, which announced AI-SPM as GA (Generally Available) on August 6, 2024, as well as CrowdStrike (September 18, 2024) and Tenable (October 15, 2024).

Mini-timeline: Wiz (Nov 2023) → Prisma Cloud GA (Aug 2024) → CrowdStrike (Sep 2024) → Tenable (Oct 2024)

2025 signal: The trend is becoming clearer that AI-SPM does not stop at configuration scanning. It is being extended toward runtime risk control, especially as organizations begin deploying more AI agents.

A key strategic question is what happens as more large platforms add this capability into CNAPP (a comprehensive cloud security platform). AI-SPM may increasingly be viewed as a “must-have feature” rather than a standalone product. This is good for buyers, who get more value inside existing bundles, but it puts pressure on companies trying to sell AI-SPM as a single-product business.

What does GA mean?

GA (Generally Available) means a feature is released for general customer use, not a preview or beta.

7) Agent Identity and Access: A Market with Plenty of Open Space

As AI agents start doing work on behalf of humans in tasks that “touch real systems,” such as booking tickets, sending emails, approving requests, or pulling data from databases, the security question shifts from “Did it answer correctly?” to “Is it allowed to do that?” and “If damage happens, can we trace what happened and why?”

In practice, “agent identity” usually means making three things explicit:

- Who this agent is (identity, origin, credentials)

- What the agent is allowed to do (authorization, scope, policy)

- Whether actions can be audited after the fact (audit trail, attribution)

The market today does not look like traditional IAM, where clear category leaders exist. Instead, it is splitting into two main forces.

- The legacy IAM and PAM camp (identity and access management, including privileged access controls), such as Okta, Ping Identity, and CyberArk. These players can extend existing systems to support “non-human identities” such as bots or AI agents, and to enable delegated access with tight scope and after-the-fact auditability.

Terminology boxIAM (Identity and Access Management): Managing identities and permissions, who is who and what they can accessPAM (Privileged Access Management): Controlling high-privilege admin-level access that can perform high-impact actionsNon-human identity: Identities that are not humans, such as service accounts, bots, workloads, and AI agentsDelegated access: Granting permission to act on someone’s behalf within constraints, such as limiting tasks, time, or data, with approval

- The “agent stack” and “policy-as-code” camp. These are the toolchains for building and running agents, plus code-driven authorization systems. They are expanding into agent permissioning and approval workflows, for example Strata Identity (identity orchestration) and Permit.io (authorization-as-code). The LangChain ecosystem has also accelerated real-world use of the “agent + tools” pattern.

Terminology boxAgent stack: Tooling to build, run, and connect agents to tools and enterprise systemsPolicy-as-code: Writing authorization rules and approval conditions as code for reuse and auditabilityIdentity orchestration: Coordinating and connecting multiple identity sources so they work together (for example HR, IAM, and different applications)Authorization-as-code: Defining what is allowed or not allowed as code (permissions, scopes) instead of manual configuration in each system

A key catalyst making this category more important is Anthropic’s Model Context Protocol (MCP), a common standard for connecting agents to tools and enterprise systems in a consistent format. MCP was launched on November 25, 2024, and was transferred for continued development as a standard under the Linux Foundation’s Agentic AI Foundation (AAIF) on December 9, 2025.

Anthropic has stated that the MCP ecosystem has more than 10,000 MCP servers, and that total SDK downloads are at the 97M+ per month level.

The structural impact is that as connecting agents to tools becomes easier and more widespread, agents will touch real systems more often. That elevates “what actions are allowed,” “what scope is permitted,” and “the ability to trace who did what using which model” from a nice-to-have into a requirement.

Terminology boxMCP: A standard for connecting agents to tools and enterprise systemsAAIF: A Linux Foundation initiative that maintains MCP as a standardSDK: Developer tooling and packages for implementing a standardMCP server: A connector that lets an agent invoke a tool, not a physical server machine

If agentic AI truly reaches production at scale in large enterprises, “agent permissions” will become as foundational as the IAM and PAM systems organizations already rely on. But if agent use remains limited to pilots or proofs of concept, the market will grow more slowly than expected, and buying behavior will likely trend toward incremental feature additions inside existing platforms rather than purchasing an entirely new standalone product.

8) AI Governance & GRC: Win with Evidence, Not Demos

This category is typically driven by GRC, Legal, Risk, the CDO function, or Responsible AI teams more than pure security teams, because the main challenge is making AI usage operationally controllable and audit-ready through documentation, evidence, and approval workflows, not just showing good demos.

Tools that tend to win are the ones that become the organization’s system of record: a complete AI inventory, where each use case is tied to data types, owners, approvals, criteria, and a clear audit trail that can be produced quickly for auditors or regulators.

For example, Credo AI offers Policy Packs that translate laws and standards into checklists and actionable steps teams can follow. ModelOp positions itself as an AI system of record that helps large organizations manage governance continuously from a single control point, especially when AI use cases are spread across teams and systems.

The risk in this category is “dashboard-only” adoption. If governance does not connect to real enforcement, it becomes shelfware because teams experience it as paperwork rather than real risk control. What tends to work is tying governance to decision points, such as approval gates before production, access controls over who can use which data, and quality gates in pipelines that enforce “pass to proceed,” not just retrospective recording.

A major tailwind is compliance pressure, especially from the EU AI Act. Many organizations increasingly need to answer questions like what AI they use, what data it touches, who is responsible, and what controls are in place. The timeline is phased, with 2 August 2026 as a major milestone when the Act broadly becomes applicable, though details vary by role and system type. There are also ongoing proposals and discussions that could adjust parts of the timeline, but changes would require formal amendments.

EU AI Act (Quick Summary)

The EU AI Act is European Union legislation establishing a risk-based framework for developing and deploying AI, with stronger obligations for higher-risk systems and certain general-purpose AI models.

The timeline is phased. A major milestone is August 2, 2026, when the Act generally becomes applicable, while some provisions apply earlier or later depending on the obligation and the role in the value chain.

Three Forces Shaping the Market’s Direction

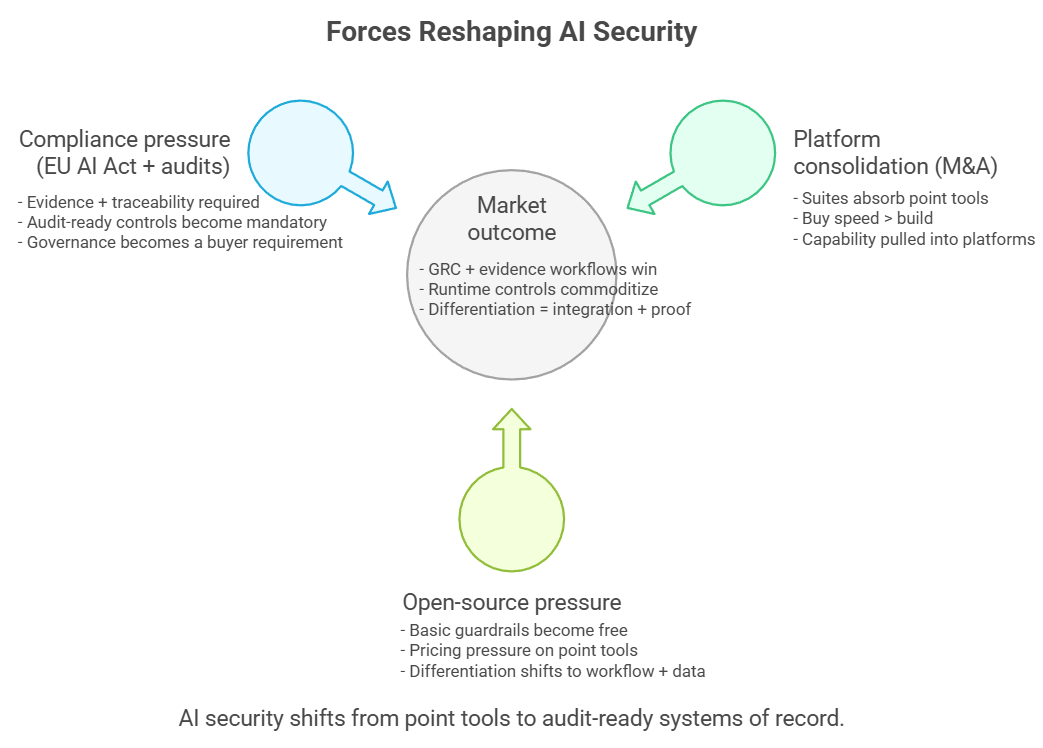

After looking across all eight categories, a clearer picture emerges. This market is not driven by features alone. It is being pulled in a single direction by three major forces.

1) A strong M&A wave is pulling the market toward large platforms

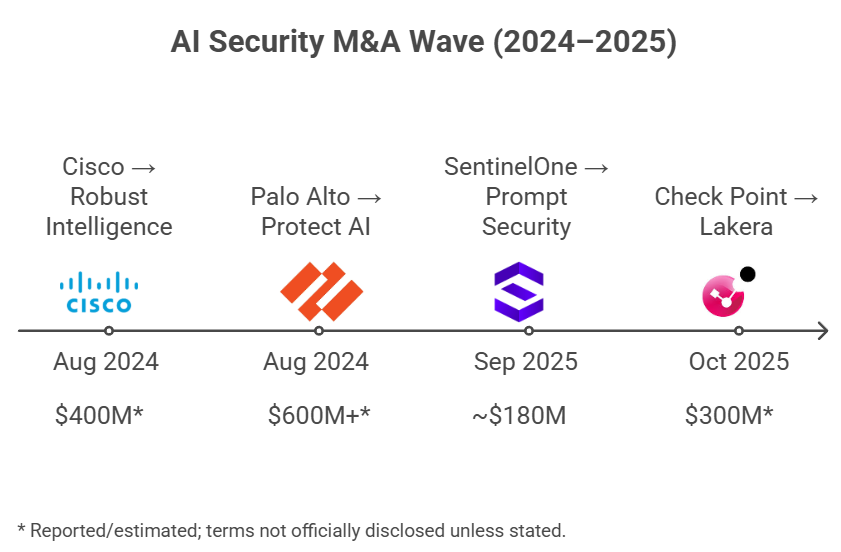

In the AI Security market during 2024 to 2025, there were four major acquisitions that are “easy to see clearly.” In terms of deal status, these are described as closed deals. Some have direct confirmation from company announcements, while others rely on reports and financial media sources.

- Cisco acquires Robust Intelligence

Cisco stated that it closed the acquisition of Robust Intelligence in September 2024. Media reports estimated the deal value at around $400 million, and Cisco has been clear that Robust’s technology is a key part of Cisco AI Defense. - Palo Alto Networks acquires Protect AI

Palo Alto Networks stated that it closed the deal on July 22, 2025. Reports have placed the deal value at $600 million or more, and the company has communicated that Protect AI’s team and technology will become a key component of Prisma AIRS. - Check Point acquires Lakera

Check Point announced the acquisition of Lakera in September 2025, along with a plan to establish a “Global Center of Excellence for AI Security.” External reports have stated that the deal closed on October 22, 2025, and have estimated the deal value at around $300 million. - SentinelOne acquires Prompt Security

SentinelOne closed the Prompt Security acquisition on September 5, 2025 (supported by SEC filings). Reports estimated the deal value at around $180 million. The stated direction is to integrate the capability into the Singularity platform to strengthen the company’s GenAI security strategy.

A notable common pattern

All four acquirers are incumbent security vendors. What they bought is largely concentrated in the runtime layer of GenAI, such as gateways and firewalls at the model input and output boundary, model supply chain checks before use, or capabilities that help control risk while systems are running in production. The picture this suggests is that large vendors often chose to “buy to keep up” rather than build everything in-house in the short term.

Questions worth asking after the deal closes

Even when a deal is closed, PR and what customers can actually use are not always the same thing. The questions to clarify are: “Is this GA yet?”, “Which product includes it, and which module must be enabled?”, and “How deeply is it integrated with the existing platform?” In the end, what matters is what works in practice, not what looks good in a press release.

2) Open Source Is Pressuring Prices Across Almost Every Layer

Across almost every layer of AI security today, there are open source tools that teams can use directly or extend. This pushes down the price of “baseline functionality” very quickly. A frequently cited example is LLM Guard, which has been reported to have download counts on Hugging Face at the million-per-month level. But those numbers should be interpreted carefully. Hugging Face download statistics are based on file requests to the server (such as HTTP GET or HEAD), so they are a coarse proxy for usage and do not map one-to-one to real unique users.

There are also open source projects that many developers already know well, such as NeMo Guardrails, promptfoo, garak, and Microsoft Presidio. Together they make it increasingly possible to get started with guardrails, evals, redaction, or testing at near-zero cost.

The implication is that companies that can charge meaningful revenue typically need to sell what open source does not provide. Examples include enterprise-grade workflows, policy enforcement that truly integrates with identity and approval systems, auditable telemetry and logging, datasets and evaluations accumulated from real production usage, or deployment and operational packaging that measurably speeds teams up.

3) The Market Is Starting to Speak a Common Language, Just as Compliance Deadlines Start Closing In

OWASP LLM Top 10 is being referenced more and more, to the point that it is starting to function as a “shared language” for discussing LLM risks between buyers and vendors. Once both sides align on the same framework, the buying question shifts from “Does the demo look good?” to “Can you explain how you control risk against this framework, and what evidence can you produce?”

Once a standard framework becomes the common language, the next unavoidable topic is regulatory deadlines. The name most frequently discussed right now is the EU AI Act. But the Act does not take effect all at once. It rolls out in phases. August 2, 2026 is a major milestone by which many organizations need to have systems that are genuinely operational and auditable. At the same time, some provisions start earlier in 2025, some obligations are deferred to 2027, and certain cases have transition periods extending to 2030, depending on the system category and the deployment context.

One additional factor to watch is that there are currently ideas and draft proposals from the European Commission, often referred to in the media under the umbrella term Digital Omnibus. These have been reported as potentially affecting the timeline for parts of the obligations, especially for high-risk systems. This is not settled yet, and the outcome depends on how negotiations and approvals proceed.

The practical takeaway is that many organizations should treat 2026 as the main readiness deadline, but when communicating internally it is more accurate to describe it as a phased rollout. That framing also helps prevent the common misunderstanding that “everything must be compliant on a single day.”

Two Questions Buyers Will Ask Much More Often in 2026

Beyond the eight categories and the three major forces, there are two additional topics that will likely come up more frequently in 2026 because they directly affect both budgets and deployment models.

1) The Cost of Evaluation and Testing (Evals and Red Teaming) Will Become an Unavoidable “Real Bill”

As organizations begin doing AI red teaming and evals seriously at an enterprise level, the question will shift from “Can we test?” to “How much does testing cost?” Large-scale testing translates directly into inference cost. That includes repeated runs, testing multiple models, multiple versions, multiple datasets, and rerunning regressions after fixes.

What buyers will start pressing on is “ROI” and “budget control,” for example:

- How do we test deeply enough without API spend or GPU cost spiking out of control?

- How do we focus testing on the highest-risk areas instead of treating every test case equally?

- How do we tie test results to real release decisions, in a “no pass, no release” way, without making the pipeline too slow to tolerate?

This will become a key differentiator for services and platforms that look more “enterprise-grade,” because they are not only selling security. They are selling security that can be delivered with predictable cost.

Quick takeaway

In 2026, evals and red teaming will become ongoing operational work. It will need to run in cycles with budget controls, not only after incidents when teams scramble to patch gaps.

2) The Sovereign AI Push, and On-Prem or Air-Gapped Deployments, Will Make “Data Must Stay Inside” a Louder Requirement

This trend will be especially visible in organizations with heavy regulation and sensitive data, such as government agencies, financial institutions, industries handling highly sensitive information, or enterprises that are increasingly shifting toward using internal models.

As usage patterns move toward local LLMs and on-prem deployments, buyers will start asking the same set of questions more often:

- Can the security tooling still work if data is not allowed to be sent to the vendor’s cloud for inspection?

- Does it support air-gapped environments, and can it retain logs and evidence fully inside the organization’s systems?

- If endpoints are not connected to the internet all the time, how can policies and threat intelligence be updated in a timely way, and can updates be managed with proper change control?

The result is that vendors who design for multiple deployment modes, and who can operate reliably in environments where “data must stay inside,” will gain an advantage, especially in large enterprise deals.

Terminology boxSovereign AI: The idea that an organization or country wants greater control over models, data, and infrastructureOn-prem: Deployed and run in the organization’s own data center or systemsAir-gapped: Network-isolated, with no internet connection or very tightly restricted connectivity

These two topics will accelerate a clearer market split between vendors that merely look good in demos and vendors that can be deployed in real environments. Real deployments must address both budget realities and strict operational constraints.

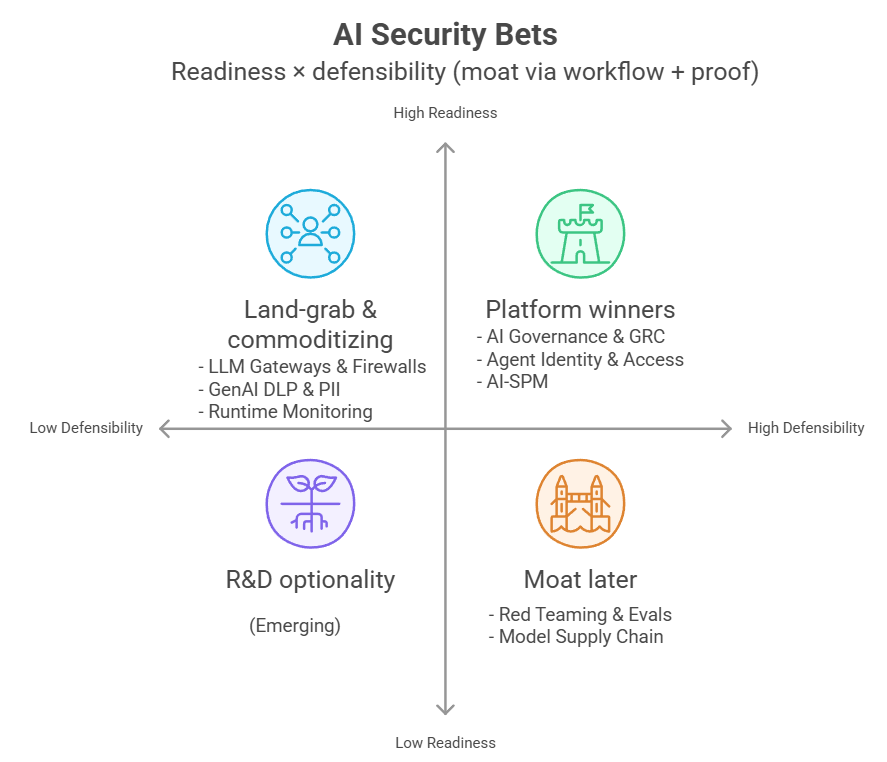

Investor Lens: Four Assumptions to Place Bets On

Bet 1: Model Supply Chain Is the AI-Era Version of Software Supply Chain Security

Put simply, the supply chain risks we used to talk about in traditional software are moving into “model files” and the artifacts around them, such as weights, tokenizers, configs, notebooks, and pipeline artifacts.

Why it’s worth betting on

This category is attractive from an investment perspective because there are still relatively few teams doing it seriously, but the problem is hard. The challenge is not just “virus scanning” for files. It involves AI-specific risks such as:

- Unsafe code paths introduced through deserialization or loading certain artifacts

- Backdoors embedded in model weights

- Supply chain contamination across the model lifecycle, such as pulling from external sources or passing through multiple hands

On top of that, regulation is increasingly pushing organizations to produce documentation and after-the-fact auditability, especially for systems that may fall under high-risk obligations in the EU AI Act. That creates an additional compliance-driven tailwind.

And if a major supply chain compromise event occurs in the future at meaningful scale, the market could be forced into rapid adoption, similar to how organizations began taking software supply chain security seriously after major incidents.

What to watch out for

If most organizations consume models through APIs rather than downloading and running model files themselves, the risk surface on the “model file” side may be smaller than expected. Platforms like Hugging Face are also expanding scanning on the Hub over time, so for some use cases this may be “good enough” without buying additional tooling.

But that does not mean the category disappears. Organizations still need to handle what sits outside the Hub, such as private models, internal registries, and policy enforcement inside their own pipelines.

Bet 2: Agent Identity Could Become the Central Control Plane for Agent Permissions Across the Enterprise

Why it’s worth betting on

When the way systems are built and used changes, identity and permissions usually have to evolve with it. The core question always comes back to the same point: “Who can do what, and how far?” As organizations use agents to perform more work on behalf of humans, that question becomes unavoidable. It is no longer only about human logins. It also includes delegated permissions that can chain across multiple steps, for example a person delegates to an agent, the agent delegates to sub-agents, and those agents invoke tools or act inside real enterprise systems.

Another catalyst is MCP (Model Context Protocol). Anthropic released it as an open specification in late 2024, and it was later moved for continued development under the Linux Foundation’s AAIF initiative. This has started to standardize how agents connect to tools and enterprise systems. As connectivity becomes easier and more widespread, agents will be used more often in real operational environments. That raises permissioning, scope control, and after-the-fact traceability from a nice-to-have to something closer to a requirement.

What to watch out for

- Incumbent IAM vendors such as Okta or Ping Identity can extend existing products to support agents quickly. With existing bundles and existing sales channels, startups focused only on agent identity may face pressure on both pricing and distribution.

- If over the next two to three years agents are still not widely deployed in production, the market may grow more slowly than expected. Buying behavior may lean toward incremental feature additions inside existing platforms rather than decisions to purchase a standalone new product.

Bet 3: The EU AI Act Compliance Wave Is Likely to Pull the Market Toward GRC

Why it’s worth betting on

The EU AI Act takes effect in phases, and August 2, 2026 is an important date because many requirements start applying more broadly. That forces in-scope organizations to build stronger documentation, governance processes, and evidence that is auditable after the fact, rather than simply “passing a demo” or relying on informal judgment.

Once the game becomes “you must be able to answer what AI you use, where it is used, who approved it, who is accountable, and what evidence supports it,” GRC-style tools benefit. They are built for approval workflows, decision records, and audit trails.

What to watch out for

Demand will not appear equally across all industries and countries. History, including GDPR, suggests that early interpretation and enforcement intensity can be uneven, causing some organizations to delay investment or do only the minimum at first.

Another factor is that organizations’ existing GRC platforms may extend to include AI governance modules. That means newer players must win by being operationally useful in day-to-day work, not by having attractive dashboards alone.

Terminology boxGRC: Governance, Risk, Compliance, organizational systems for oversight, risk management, and regulatory complianceGDPR: The EU’s personal data protection law (effective 2018), often cited as an example where early enforcement and interpretation can be inconsistent

Bet 4: Indirect Prompt Injection Specialists Have Technical Advantages That Are Hard to Copy

Why it’s worth betting on

Many solutions in the LLM firewall or gateway category start by blocking “dangerous commands users type directly,” because those are easier to detect and measure. Indirect prompt injection is a different class of problem. The instruction is hidden inside “data the model reads,” such as attached documents, RAG-retrieved content, or tool outputs. That makes it much easier for the system to confuse “information” with “instructions.”

As organizations use more RAG and more tool-calling agents, the layers of external data flowing into the model’s context become thicker. As a result, the attack surface for this type of injection expands as well.

Who to watch

Some vendors position themselves clearly around this risk area, such as PromptArmor, which emphasizes indirect prompt injection and threat intelligence as core themes, or Adversa AI, which consistently produces content and services focused on prompt injection.

What to watch out for

If Anthropic or OpenAI significantly strengthen model-level separation of instruction priority, such as instruction hierarchy concepts, demand for some external tooling could decrease.

On the other hand, we may still lack strong public numbers showing how frequently indirect injection happens in real production. That makes some organizations view it as “hard to visualize,” and they may delay purchasing until there is a nearby incident or a concrete internal case.

Detection in this space requires deeper understanding of semantic context than simple pattern matching. It often demands high-quality models and datasets with real examples, which are slow to develop and difficult to accumulate.

Signals to Watch

If you want to see where the AI Security market is heading, watch for these three types of signals.

1) Signs the market is starting to consolidate into platforms

You increasingly see certain capabilities (such as AI-SPM) being added as part of large security platforms, and many vendors are trying to centralize control across multiple layers in a single place to reduce the burden of stitching many tools together.

2) Signs the market is starting to use the same framework

Some procurement documents are beginning to reference frameworks like OWASP LLM Top 10 in their questions or requirements. More buyers are also starting to ask for “evidence that can be audited after the fact,” rather than relying on impressive demos alone.

3) Signs AI risk is being discussed at the executive level

AI security and privacy incidents are appearing more frequently, pushing organizations to see this as not only a technical team problem, but an enterprise-level risk that needs clear accountability and supporting evidence. At the same time, the EU AI Act has high penalty ceilings, which is another force pushing many organizations to take governance and evidence systems more seriously.

If You Had to Place Money Next Week

Three Categories Worth Betting On

- AI Governance and GRC

The main driver is regulatory and audit pressure, especially from the EU AI Act. Many organizations will need to build documentation systems, approval workflows, and evidence that can be audited after the fact more seriously during 2026. This category does not win on demos. It wins on operational systems that let Legal, Risk, and accountable owners do real work day to day. - Model Supply Chain Security

This is not something every organization buys immediately, but the problem becomes unavoidable as external model usage increases. The value comes from validating the trustworthiness of models and related artifacts, and tying controls into the organization’s registry or pipeline. If a major production incident tied to model supply chain compromise happens, the market could accelerate sharply. - Services-led AI Red Teaming

Basic testing tools are increasingly available for free, but what still has durable value is expertise and continuous repetition. That includes designing scenarios aligned with the business, testing indirect prompt injection, testing real tool-calling agent cases, and helping teams close gaps with measurable outcomes. In many enterprises, services-led revenue is harder to compress than selling tooling alone.

Two Categories to Treat with Extra Caution

- LLM Firewalls Without Real Differentiation

This market is crowded, and baseline features look very similar across vendors. There are also open source options, and large platforms are already bundling these controls into products customers are already buying. If the only selling point is “we have a firewall,” you will struggle on both pricing and distribution. - Eval Harnesses That Are Only Test Runners

If a product is only a test runner, without proprietary datasets, without workflows that fit how teams actually work, and without tying test results into real release gating, it is hard to monetize. Teams can increasingly assemble similar capability themselves from open source.

Decision Checklist (The Questions Buyers Should Actually Ask)

- “Production-ready” claims: How production-ready is it today? Are real customers already using it?

- Indirect prompt injection coverage: How well does it handle indirect prompt injection? Are there test results or explainable cases?

- Deployment model: Is it a proxy in front of systems, an SDK embedded into the app, or an add-on inside work tools? Each option affects teams and rollout timelines differently.

- Enterprise integration: Can it integrate with enterprise systems such as user permissions, approval workflows, logs and audit trails, and incident tracking, or is it a standalone tool?

- Open source strategy: If baseline functionality is available for free, where exactly is the differentiated value?

Conclusion

The AI Security market is increasingly flowing toward platform consolidation, especially in layers that sit close to real usage, such as front-line proxy and gateway layers, data loss prevention (DLP), AI posture management (AI-SPM), and governance layers that manage policies and audit-ready evidence. This dynamic favors large incumbents with existing customer bases and established sales channels, because they can add features into existing bundles, sell more easily, and immediately leverage data from systems customers already run.

At the same time, meaningful “gaps” still exist in areas where the market has not settled. Examples include agent identity and permissions, where there is still no clear standard operating model; model supply chain security, which many organizations have not purchased seriously yet but has strong reasons to grow as external model usage increases; and indirect prompt injection, which remains a blind spot for many teams because it does not arrive as a direct user prompt.

To understand where the market is really heading, do not look only at who sits in which category. Look for who is capturing the “daily control points” that work must pass through, such as enforcement points with real policy control, complete logs and evidence, and tight links to enterprise approval processes. Those points often create high switching costs and durable long-term advantage.

Data as of early February 2026

EU AI Act timeline references Regulation 2024/1689 Article 113

Note

This report is AI-assisted — I acted as the project lead, with AI as my assistant. I’m responsible for the report’s overall direction and for the accuracy of the content.

It took about two days to produce (including all the diagrams). In the past, researching, synthesizing, and analyzing the material to this level would have taken weeks. AI turned out to be an excellent time-saver.

Workflow

- I asked Claude and ChatGPT to gather initial background information.

- I had them take turns fact-checking: for each claim, they searched and provided citations, and I manually checked where sources conflicted or contradicted each other.

- In this step, I instructed ChatGPT to be especially “nitpicky,” which inevitably created lots of false positives (flagging things that weren’t actually wrong). Even so, it helped surface risk areas quickly.

- I set the outline and gave clear guidance on the intended structure, perspective, and the kind of output I wanted.

- ChatGPT produced Draft 1 → Claude revised it to improve the overall structure and normalize the tone and language level first, so later line-by-line edits had a consistent baseline.

- I reviewed each section, clarified what role it should play and what message it needed to convey, then asked ChatGPT to revise accordingly.

- I reviewed paragraph by paragraph, requesting additional explanation where needed; ChatGPT produced 3–5 options to choose from.

- I reviewed sentence by sentence to eliminate awkward phrasing (e.g., overly literal translations), asking ChatGPT to propose 10–12 alternatives for problematic lines.

- I translated the report into English by having ChatGPT translate section by section, then I read through and checked that it worked.

- I had ChatGPT generate the text that would go into the diagrams, then placed it into Napkin AI templates (I’m picky—small details affect what the visuals communicate, and I don’t usually like fully automatic outputs).

Bonus: one of the raw AI research files Claude produced as an initial starting point.

A vendor analysis mapping 30+ AI Security companies across 8 categories with detailed profiles (pricing, deployment, tech approach) plus actionable buyer workflows and investment frameworks.

Please verify before using ⚠️ it’s unedited AI output and may contain errors, omissions, or outdated details.

Translated by GPT-5.2