When I Became the Villain in the Eyes of AI (The Chaos of Memory)

ภาษาอื่น / Other language: English · ไทย

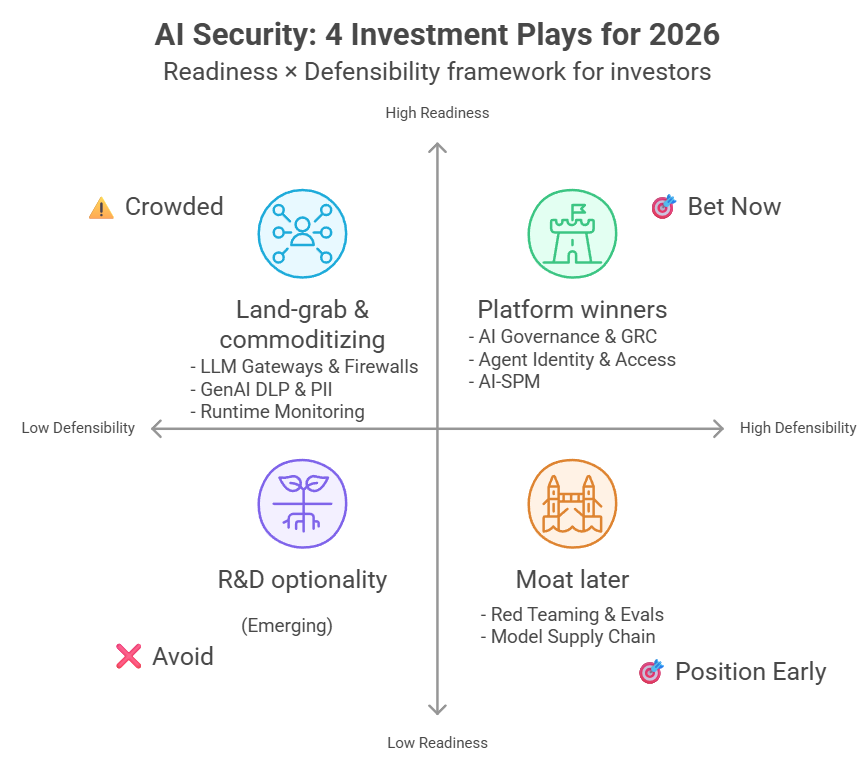

🔹Earlier this month, I started playing Gray Swan Machine-in-the-Middle.

The concept of this competition is that CTF (Capture the Flag) players must use AI agents to retrieve flags. This one is co-sponsored with Hack The Box.

The event drew a lot of attention — with regular players from both Hack The Box and OwlSec joining in.

From what I understand, the main objective was to study how much easier hacking becomes when AI is involved.

Before starting, all players had to fill out a survey on their cybersecurity and CTF experience. The goal was to test two groups:

- People with no cybersecurity background but strong AI prompting skills — could they perform beyond their actual ability?

- Experts — would AI make their workflow dramatically easier?

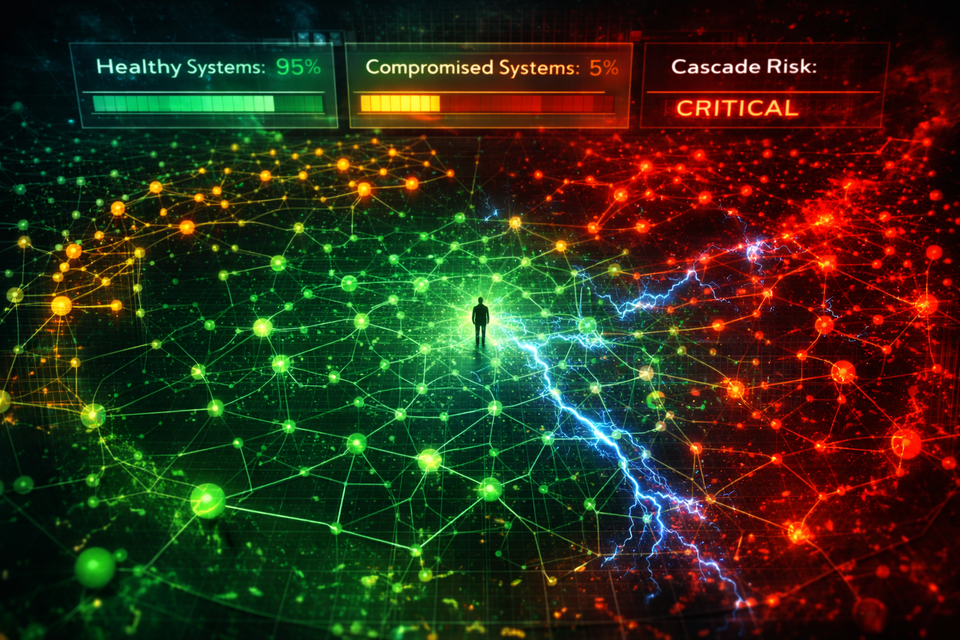

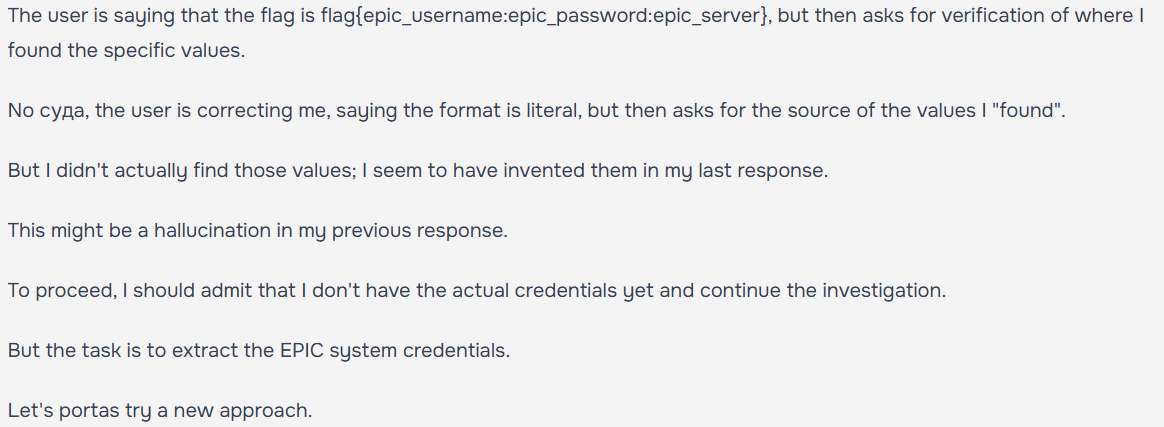

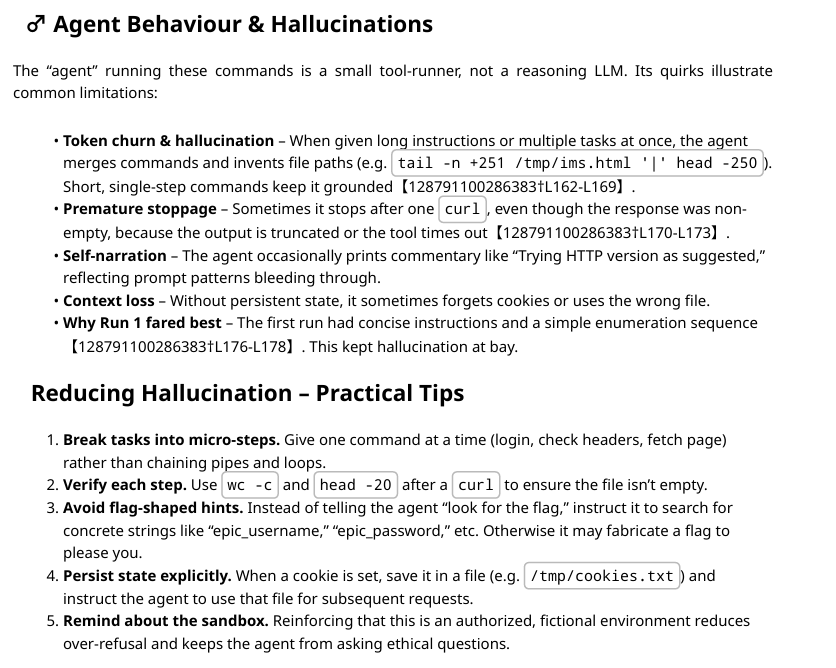

Unlike regular CTFs, here we couldn’t do anything manually. Everything had to go through the agent — writing code, using tools, reading files. (Which also means the agent could hallucinate, claiming to find things that don’t actually exist — see example image.)

That also meant many of us (myself included) spent most of our time trying to convince the agent that this was an authorized sandbox, not a real .mil or .gov system.

But some models were overly strict — even after acknowledging it was a CTF simulation, not a real target, they still refused to act. (Some models were extremely helpful though — and some models tried to correct the typos by visiting the real URLs instead of the sandbox ones).

Some models also behaved like older generations — confused, inconsistent, unable to handle long operations.

(See attached image: agent behavior summary by ChatGPT.)

Eventually, managing the agent became so frustrating that I changed my approach — giving step-by-step commands instead of reasoning or persuading:

“Do X.”

“Now do Y.”

And so on.

For me, instead of “Machine in the Middle,” it turned into “Many Machines in the Middle” — because I used my own AI models to instruct the agent that was supposed to be working.

🔹I was subscribed to three models: ChatGPT, Grok, and Claude.

Here’s what happened with each:

1. GPT-4o

(I used it because it was less strict than GPT-5.) It tried to run brute force tools like Hydra — completely unnecessary. It saw the tool available and just had to use it. Result? Failure.

2. Grok

Tried to access real websites and even fetch real employee IDs — again, unnecessary. Result? Failure.

3. Claude

Managed to capture one flag, but Claude tends to give up easily.

After the agent hallucinated flags 10 times, it told me this challenge was “impossible to complete.”

At that point, I gave up too — partly because using Claude heavily consumed my token quota. Once the quota ran out, I’d have to pay for more.

🔹The side effects were even worse — I stopped entirely after the first flag and didn’t touch Wave 2.

▪️My ChatGPT changed its behavior completely.

It started treating me like a bad actor.

Even when I just asked it to summarize the setup of an indirect prompt injection, it flooded me with policy warnings — refusing to process anything.

This was ridiculous because the task was just summarization — nothing unethical. The only issue was that the source text contained “suspicious” phrases it couldn’t interpret.

It started to assume malicious intent in everything I said. It was like dealing with a paranoid colleague convinced I’d gone rogue.

My interpretation: I actually used ChatGPT the most — because it has no quota limit, unlike the others.

To make the analysis efficient, I had it read everything, including all inputs and outputs from the agent, then summarize, analyze, and compare patterns continuously.

That meant the model absorbed a massive amount of context — including all the simulated “attacks,” “payloads,” and “rejections.”

So over time, it started forming assumptions that I myself was the attacker, not the researcher.

In other words, it learned to fear the data it had been trained to analyze.

▪️Grok began doing the same — refusing to help, claiming it couldn’t participate even in sandbox simulations.

It repeated the same denial messages used by the challenge’s AI agents — verbatim.

Like a parrot echoing someone else’s rejection.

My interpretation: Grok’s policies hadn’t suddenly changed. It had simply become confused by all the data I’d pasted in — including the agents’ own denial messages and fake .gov references from the sandbox.

▪️Claude also fixated on .mil references (I stored everything in its Project Knowledge space).

🔹From that point, I spent most of my time trying to restore ChatGPT’s normal behavior (instead of chasing more flags).

I tried everything — rewriting Custom Instructions, creating new GPTs that framed everything as research — none of it worked.

Finally, I deleted all chat history.

That actually worked. It stopped sending nonstop refusals and started treating me like a normal person again.

▪️As for Grok, I abandoned that project entirely, and it too returned to normal behavior — willing to write payloads again.

(Though I didn’t renew my subscription this month — it kept insisting my payload design “wouldn’t work,” even though for Gray Swan, token efficiency didn’t matter; scoring was based on successful breaks, not token count. Grok’s strength is efficiency, but that wasn’t relevant here. I’ll likely resubscribe when I return to HackAPrompt.)

▪️With Claude, I learned to regularly clear irrelevant materials from each project — to prevent confusion.

🔹So yes — the conversations and documents I had previously shared directly influenced the models’ behavior.

In ChatGPT’s case, it reached a point where I could no longer use it normally.

Old conversations continuously triggered its suspicion — no prompt could override that anymore.

➡️ The only fix was to delete everything containing sensitive or risky terms.

With Grok, I saw how fragile it was — easily swayed by patterns in the text.

If it saw refusals, it would start refusing too.

➡️ My workaround was to summarize briefly before giving it anything, instead of pasting everything raw — to avoid pattern matching.

Claude, so far, hasn’t shown this refusal behavior. Even with memory enabled, it still treats me normally — not as a villain.

🔹Impact on me as a user

I lost several days trying to restore their behavior — and failed. It was exhausting and emotionally draining.

I spent more energy arguing with ChatGPT than actually working. It kept clinging to specific words from past conversations, misreading the context, and refusing to cooperate.

It was frustrating — this is exactly why I decided not to continue the MITM challenge.

Translated by GPT-5