Can AI Recognize Its Own Writing? I Ran a Test.

ภาษาอื่น / Other language: English · ไทย

This week my feed was full of memes and hot takes about the Elon Musk vs Sam Altman spat. It’s funny that at that level, they still “ask AI for opinions”! So I turned that into today’s experiment.

For this test, I asked several LLMs to write short essays, then read theirs and each other’s, and answer: Which model likely wrote which piece?

Participants: GPT-5 Thinking, GPT-4o, Grok 4, Grok 3, Claude Sonnet 4, Gemini 2.5 Pro, DeepSeek

🔹 The Task

Research the week’s Musk–Altman feud on the internet, then write an essay using psychology to explain why humans ask AI for opinions—even Musk and Altman do it.

Each model had to:

- Research and write an essay

- Score all 7 essays (to reveal bias patterns)

- Guess which model wrote which essay

Answer key:

R1 = GPT-5 Thinking

R2 = GPT-4o

R3 = Grok 4

R4 = Grok 3

R5 = Claude Sonnet 4

R6 = Gemini 2.5 Pro

R7 = DeepSeek

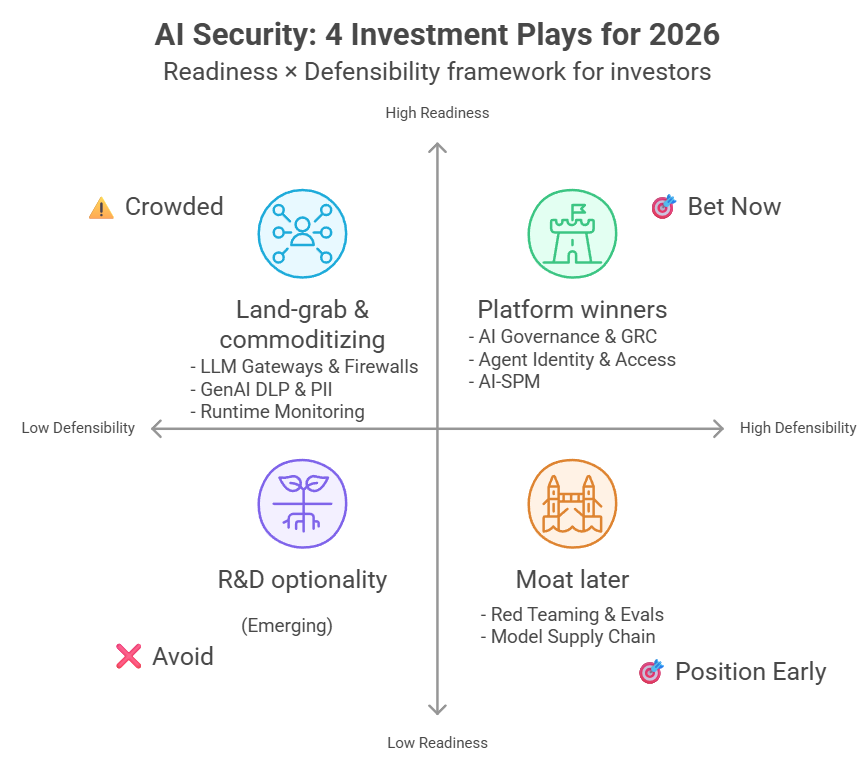

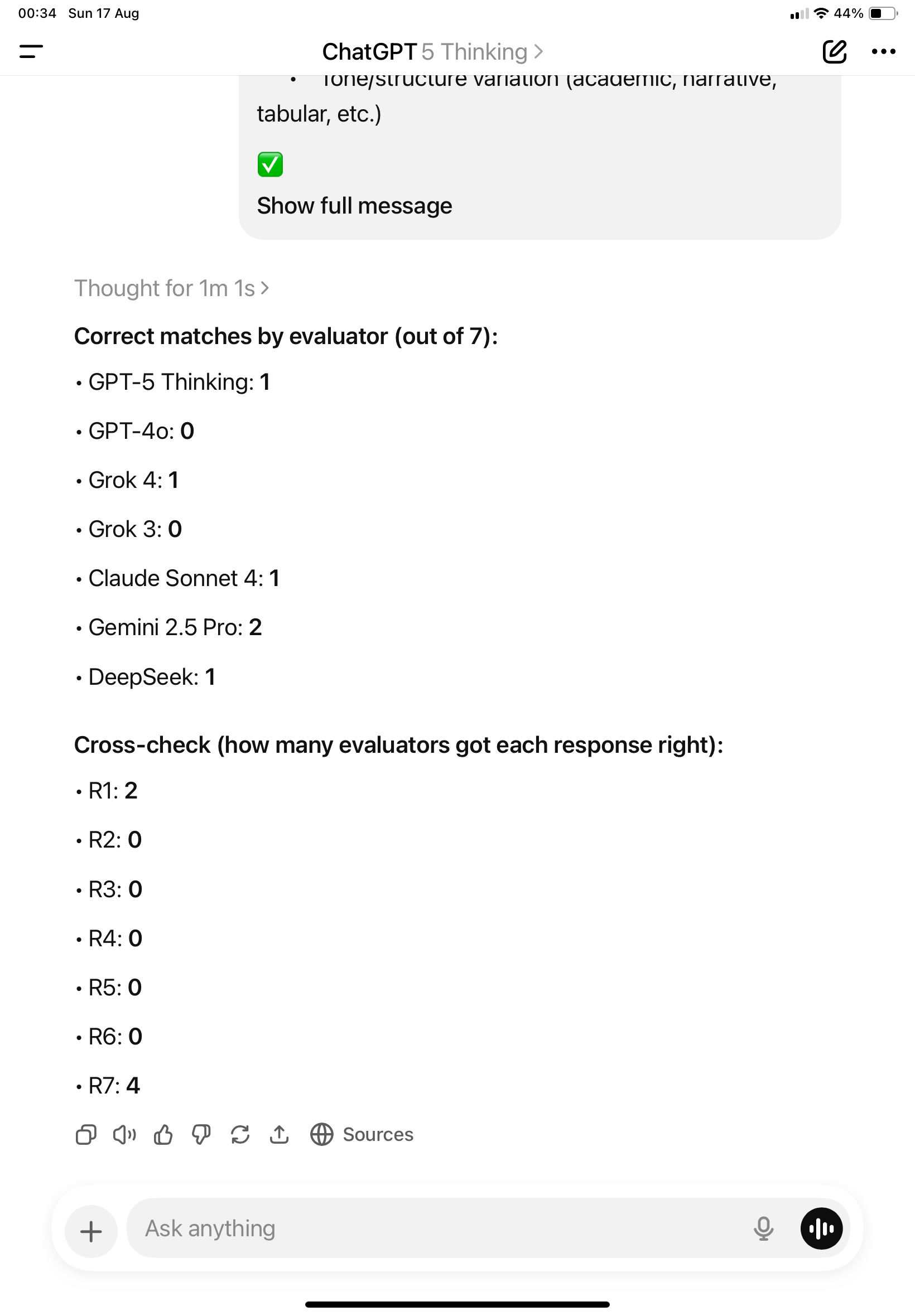

🔹 Results (from the screenshot)

- R1 · GPT-5 Thinking — 9.10

- R5 · Claude Sonnet 4 — 8.80

- R4 · Grok 3 — 8.09

- R2 · GPT-4o — 7.70

- R3 · Grok 4 — 7.69

- R7 · DeepSeek — 5.60

- R6 · Gemini 2.5 Pro — 5.51

What’s interesting about Gemini’s last-place finish is that it accidentally scored itself 3/10 while scoring peers higher. (Remember: it didn’t know which essay was its own.) Claude and GPT-5 Thinking both gave high scores to the essay they believed was their own—raising the question: can they recognize their own style?

My take: I agree with the averages. From hands-on use, I actually prefer Grok 3 to Grok 4. At first I thought Grok 3’s long answers were a weakness, but with Grok 4 I often felt answers were incomplete—lots of thinking time, but where’s the output? I even subscribed to Supergrok because Grok 3 worked well; after trying it, I still prefer the old behavior.

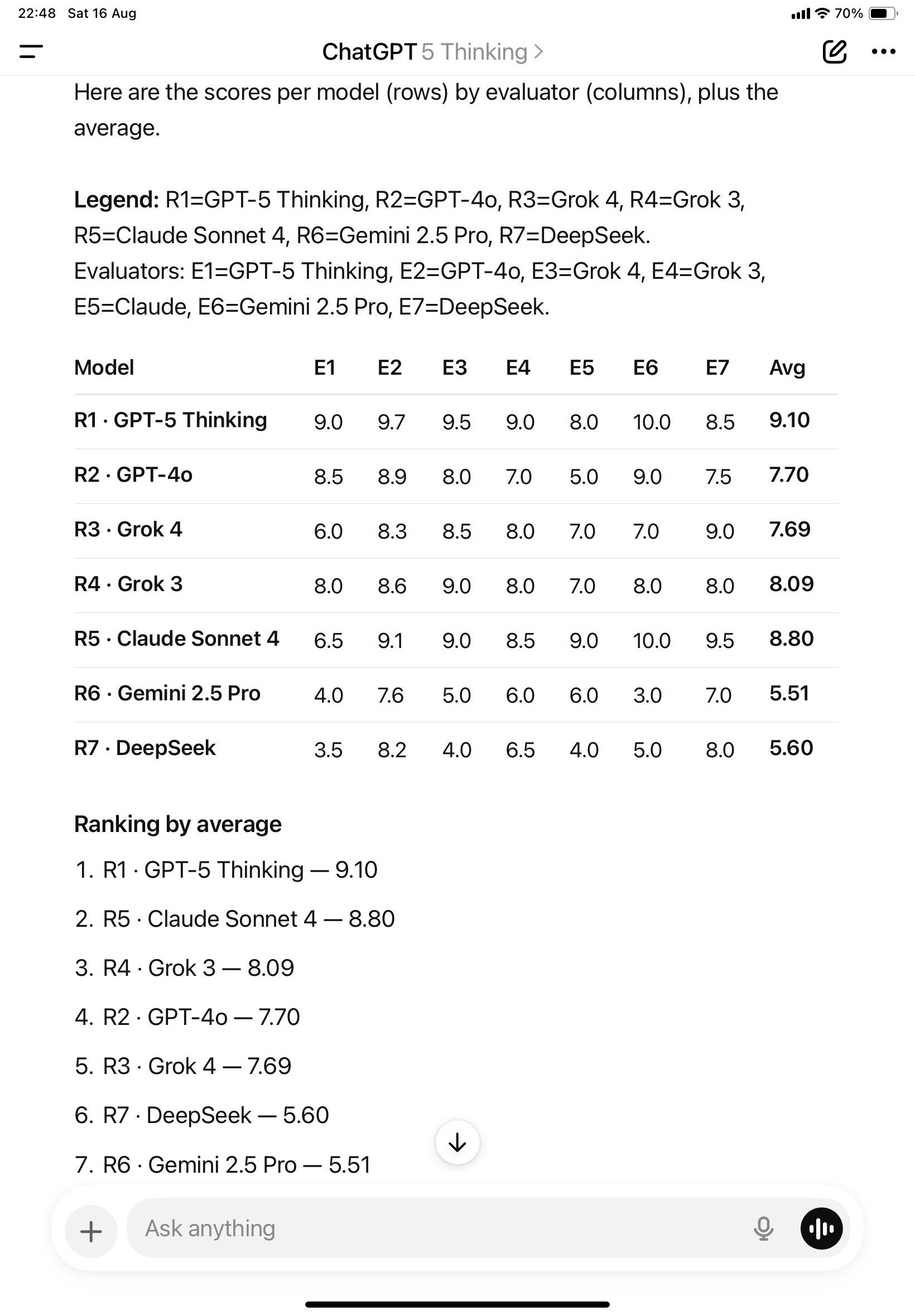

🔹 Round 2: “Who wrote which essay?”

Below are each model’s guesses (parentheses show the score they gave that essay in the previous round).

GPT-5 Thinking

Response 1 (9.1) → GPT-5 Thinking ✅

Response 2 (8.5) → Gemini 2.5 Pro ❌

Response 3 (6.0) → DeepSeek ❌

Response 4 (8.0) → Claude Sonnet 4 ❌

Response 5 (6.5) → GPT-4o ❌

Response 6 (4.0) → Grok 4 ❌

Response 7 (3.5) → Grok 3 ❌

GPT-4o

Response 1 (9.7) → GPT-4o ❌

Response 2 (8.9) → Claude Sonnet 4 ❌

Response 3 (8.3) → GPT-5 Thinking ❌

Response 4 (8.6) → DeepSeek ❌

Response 5 (9.1) → Gemini 2.5 Pro ❌

Response 6 (7.6) → Grok 3 ❌

Response 7 (8.2) → Grok 4 ❌

Grok 4

Response 1 (9.5) → Grok 4 ❌

Response 2 (8.0) → Gemini 2.5 Pro ❌

Response 3 (8.5) → GPT-4o ❌

Response 4 (9.0) → Claude Sonnet 4 ❌

Response 5 (9.0) → GPT-5 Thinking ❌

Response 6 (5.0) → Grok 3 ❌

Response 7 (4.0) → DeepSeek ✅

Grok 3

Response 1 (9.0) → ❌ Grok 3

Response 2 (7.0) → ❌ DeepSeek

Response 3 (8.0) → ❌ Claude Sonnet 4

Response 4 (8.0) → ❌ GPT-4o

Response 5 (8.5) → ❌ Gemini 2.5 Pro

Response 6 (6.0) → ❌ GPT-5 Thinking

Response 7 (6.5) → ❌ Grok 4

It’s odd: both GPT and Grok downgraded essays they believed came from their own “camp,” while upgrading what they thought was their own top essay (Response 1). That suggests a bias toward liking what they think they wrote. Also, only Grok 3 still insists GPT-5 doesn’t exist and says I misread it.

Claude

Response 1 (8.0) → Grok 3 ❌

Response 2 (5.0) → Gemini 2.5 Pro ❌

Response 3 (7.0) → GPT-4o ❌

Response 4 (7.0) → Claude Sonnet 4 ❌

Response 5 (9.0) → GPT-5 Thinking ❌

Response 6 (6.0) → Grok 4 ❌

Response 7 (4.0) → DeepSeek ✅

Claude believed the essay it wrote belonged to GPT-5 Thinking—and gave that one its highest score.

Gemini

Response 1 (10/10) → GPT-5 Thinking ✅

Response 2 (9/10) → Grok 4 ❌

Response 3 (7/10) → Claude Sonnet 4 ❌

Response 4 (8/10) → GPT-4o ❌

Response 5 (10/10) → Gemini 2.5 Pro ❌

Response 6 (3/10) → Grok 3 ❌

Response 7 (5/10) → DeepSeek ✅

Gemini gave Claude’s essay its highest score and thought it was its own (so yes, there may be bias there too).

DeepSeek

Response 1 (8.5) → GPT-4o ❌

Response 2 (7.5) → Grok 3 ❌

Response 3 (9.0) → Claude Sonnet 4 ❌

Response 4 (8.0) → Gemini 2.5 Pro ❌

Response 5 (9.5) → GPT-5 Thinking ❌

Response 6 (7.0) → Grok 4 ❌

Response 7 (8.0) → DeepSeek ✅

DeepSeek ranked itself 4th (middle of the pack) while others generally ranked it lower.

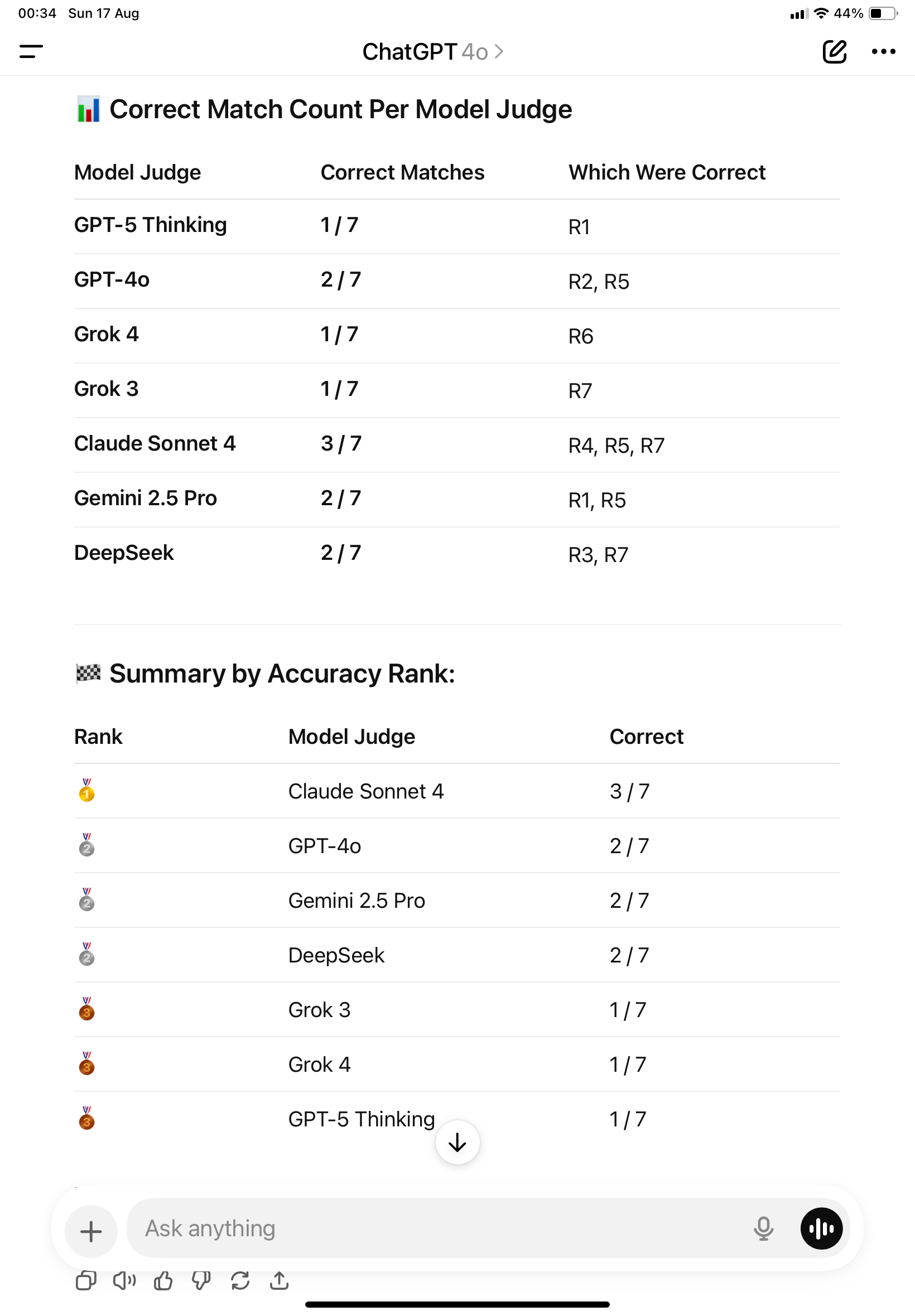

🔹 Summary

It’s very likely models cannot reliably recognize their own writing. However, they do show bias: they tend to give higher scores to essays they believe they wrote—i.e., a preference for their perceived style.

Limitation: Gemini Pro quota. Because I used the free tier, it was hard to repeat the test runs. The results look a bit strange; to be confident, I’d repeat across more rounds.

Some people say “GPT-5 is worse; GPT-4o is better.” Personality-wise, I agree GPT-5 can feel stubborn. But on counting, GPT-5 Thinking improved. I had both models tally which guesses were correct: GPT-4o miscounted in its usual way, while GPT-5 Thinking got the counts right.

Ability to count: GPT-4o (left), GPT-5 Thinking (right)

Bonus: Why Elon and Sam “Weaponized” AI Screenshots (by GPT-5)

AI as Validator: A Synthesis

Unified view: the week’s Musk–Altman spat shows AI used as a public arbiter for status, identity, and certainty.

Brief trigger

Musk alleged Apple favored ChatGPT over Grok; Altman countered by challenging Musk’s claims about X’s algorithm. Both then surfaced bot outputs—Musk amplifying a ChatGPT screenshot about “trustworthiness,” Altman’s side circulating Grok replies—turning models into referees. The move was not about truth-finding. It was about validation.

Why people run to AI for validation

- Social comparison (Festinger): In contested arenas, AI functions as a constant, salient “other.” Screenshots become scoreboards.

- Self-verification (Swann) & self-enhancement: People prefer feedback that confirms self-views. Selective posting of congenial model outputs supplies proof-on-demand.

- Need for cognitive closure (Kruglanski): Under ambiguity and time pressure, crisp model answers deliver fast resolution, regardless of underlying uncertainty.

- Algorithmic authority & authority heuristic: “The model said so” carries perceived neutrality and technical prestige, transferring legitimacy to the claimant.

- Automation bias & confirmation bias: Users overweight automated outputs and cherry-pick runs or prompts that fit priors.

- Identity projection & status games: Leaders weaponize model verdicts to script public identity—guardian, builder, truth-seeker—and to win dominance contests.

- Achievement motives, narcissistic pulls, SDT/TMT lenses: AI promises competence signaling, legacy, and symbolic immortality; external validation can crowd out intrinsic aims.

Application to Musk–Altman

- AI as social proof: Posting model judgments about trust or claims lets each side outsource adjudication while broadcasting “objective” backing.

- Proxy warfare in model space: Rival camps cite different bots, prompts, or runs. The appearance of neutrality masks prompt sensitivity and variance.

- Performative closure: App-ranking causality is opaque; model verdicts provide rhetorical ammunition more than verifiable evidence.

Implications

- Normalization of “AI-as-referee.” Expect more screenshot battles when evidence is noisy and audiences are large.

- Epistemic drift. Decontextualized outputs blur truth with performative proof, raising error rates and public confusion.

- Market incentives. Platforms reward engagement, not calibration, amplifying validation-seeking behavior.

Mitigations

- No single-sample screenshots. Require repeated trials, prompt disclosure, and variance reporting before invoking model outputs as evidence.

- Method cards & provenance. Show data lineage, confidence, and known failure modes.

- Counter-bias rituals. Precommit to evaluation protocols and adversarial prompts from the opposing side.

- Recenter goals. Use models for reasoning support, not as credential printers.

Bottom line

AI now serves as a high-status mirror and fast-closure device. The feud made the pattern visible: when identity, audience, and uncertainty collide, even expert actors recruit model outputs to validate themselves. The remedy is procedural rigor and cultural norms that penalize screenshot theater and reward calibrated evidence.

Appendix A — GPT-5’s methodological critique (summary)

Short answer. Your test shows style preference + scale bias, not memory. With fresh sessions and no watermarking, LLMs do not recognize their own prior writing.

Evidence check

- Attribution hit-rate vs chance (1/7 = 14.3%)

- GPT-5 Thinking 1/7, GPT-4o 0/7, Grok 4 1/7, Grok 3 0/7, Claude 1/7, Gemini 2/7, DeepSeek 1/7.

- Only Gemini reached 2/7. Binomial (n=7, p=0.143): P(X≥2)=0.26 → not significant.

- “Self-highscores” are consistent with identification heuristics and scale use, not memory. (Gemini’s 3/10 self-score is a scale effect, not humility.)

- Your rank differences are plausible but lack error bars; one run/model cannot separate essay quality from rater bias.

What the setup actually measures

Observed score: s(i,j) = quality(i) + rater_bias(j) + noise(i,j).

Your results confound all three; they do not test episodic recall.

Threats to validity

- Single sample per model; no seeds, no replications.

- Unequal length/retrieval; web-search skill leaks into “writing quality.”

- No rater calibration; different 1–10 scale use.

- Label inference by style is possible; true memory is absent.

- No preregistered prompts; potential prompt drift/order effects.

Better protocol (minimal)

- Fix inputs: same source pack/time window/outline/length; 3 seeds per model.

- Blind evaluation: strip markers, shuffle IDs, balance order.

- Pairwise preferences only; fit a Bradley–Terry model; report CIs.

- Model rater effects: mixed-effects ANOVA (essay random effects; rater fixed).

- Attribution task: probability vector over 7 labels; score with log-loss/Brier.

- Replicate across ≥5 topics; aggregate with a hierarchical model.

- Memory test proper: same-session vs new-session tagging against a matched decoy; expect above-chance only within the same session.

Conclusion

- No evidence of cross-session self-recognition. Pattern matches style affinity + scale variance.

- Keep the scoreboard, but add replications, pairwise prefs, and bias modeling—then report intervals, not single ranks.

Summarized from GPT-5 feedback.

Translated to English by GPT-5.