Looking at LLMs Through a Finance Lens (1): stochasticity, randomness, and tail inevitability

ภาษาอื่น / Other language: English · ไทย

This Christmas and New Year period, instead of baking like I usually do in other years, I spent my time reading books and reorganizing my home and bookshelves. One nice side effect was that reading all kinds of things gave me new ideas—it felt like a mental refresh and brought back some creative energy.

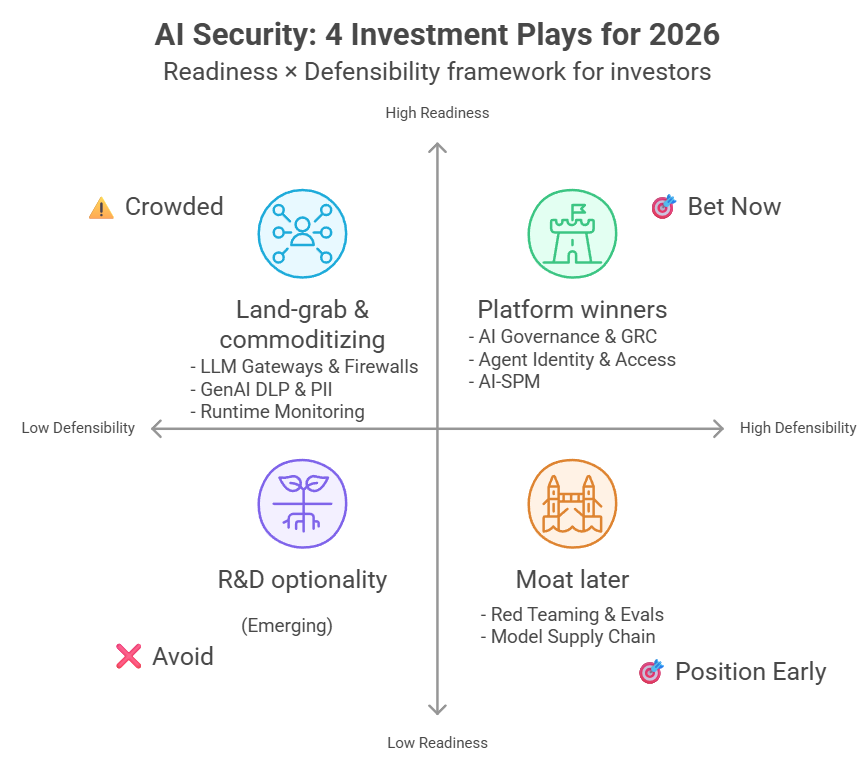

That’s how this mini-series came about. I’m planning roughly 5–6 posts to explain how someone from a finance background—who can talk about red teaming a little (mainly because I happen to enjoy red team competitions)—thinks about LLM risk.

This post came out a few days after New Year because I caught a cold and ended up focusing on sleeping instead.

In this first post, I’m not trying to say that LLMs are dangerous because they’re “too smart,” but because they are stochastic systems that adversaries can push toward the tails.

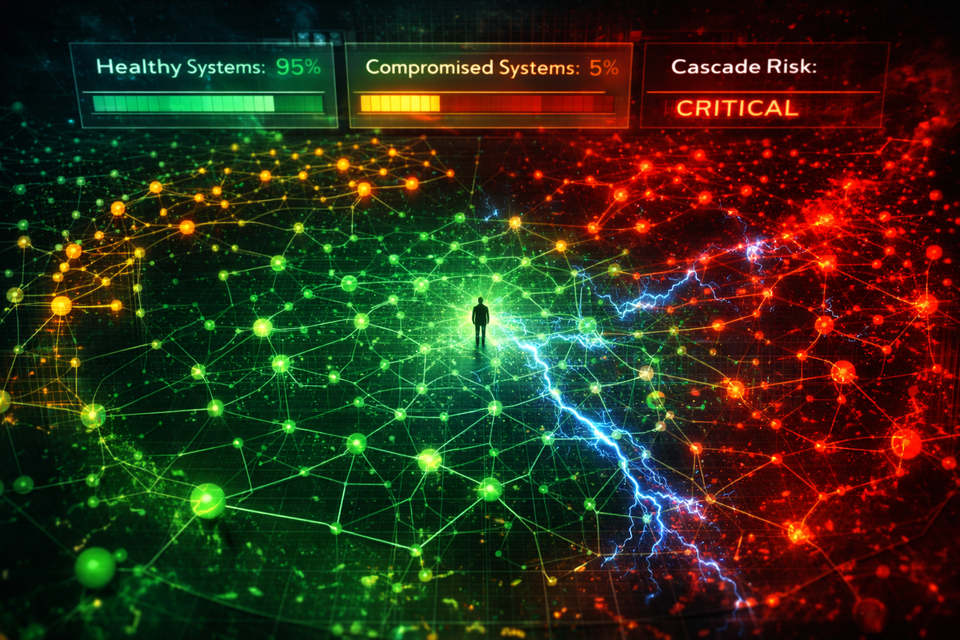

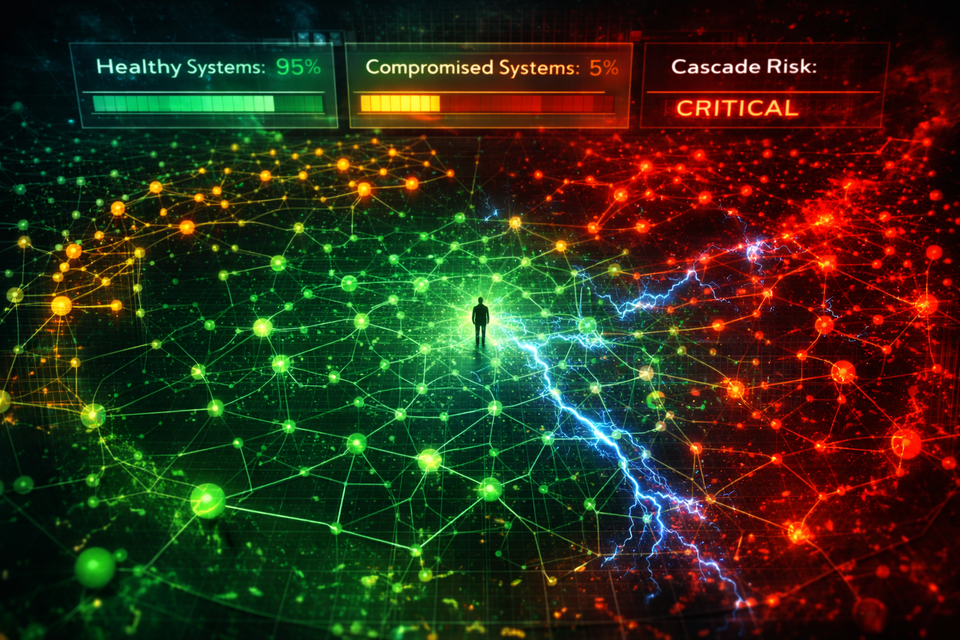

🔸 Over the past few years, we’ve started to see strange incidents happen again and again in AI-powered systems.

These aren’t traditional malware attacks or classic code vulnerabilities—but attacks carried out through language.

They come in the form of credible-looking documents, well-written reports, or text with a professional tone.

For example, a PDF that looks like a normal research report, but hides instructions that cause an AI to recommend transferring money to an attacker’s account instead.

When an LLM reads all of that, it may confidently summarize the content and even suggest what to do next (many systems are designed to be proactive, so they often give recommendations even when the user didn’t explicitly ask).

The problem is… sometimes following those recommendations causes harm.

And in systems with real privileges, the outcome may not just be bad advice—it can be an action that has already happened and cannot be undone.

In many cases, the damage doesn’t come from the AI “analyzing data incorrectly,” but from being tricked into doing something it should never have done—while being completely confident about it.

This is where the term LLM tail risk starts to matter. And if we want to understand why it’s scary, I think this is a good place to start.

Both finance and real-world LLM systems are stochastic, but the sources of uncertainty and the shapes of risk are different.

⸻

Finance and LLMs: different kinds of randomness

In finance, nobody knows tomorrow’s prices for sure. Information is incomplete, different players have different worldviews, and when new information arrives, everyone is ready to change their mind immediately.

Financial randomness comes from the real world environment, which humans cannot turn off—we can only try to survive within it.

LLMs also use randomness. The same question can produce different answers: the tone changes, details differ, or sometimes the model ends up following an attacker.

This is very different from traditional coding.

⸻

Programming vs. LLMs: a different kind of logic

In traditional programming, we’re used to this logic:

input → logic → output

If the input is the same, the output must be the same.

This is the world of if–else rules and deterministic systems.

If something breaks, it means the code is wrong—not that you were “unlucky.”

LLMs don’t work that way.

⸻

LLMs don’t “decide”—they estimate probabilities

At the most basic level, an LLM doesn’t ask, “What is the correct answer?”

It asks, “What is the most likely next token?”

To visualize it: inside the model, there’s always a probability distribution—one word ~35%, another ~25%, and several others that are still “acceptable.”

What we see as smooth sentences is actually repeated sampling from these distributions.

That’s why the same answer can be written differently. The structure may be similar, but it’s never identical—and sometimes it crosses boundaries even when nobody intended it to.

⸻

Then why does it sometimes look deterministic?

Because we force it to be less random using things like temperature, top-p, and decoding strategies.

If temperature = 0, the model always picks the highest-probability token. The result looks deterministic, because under “everything stays the same” conditions, the output may repeat.

But real LLM systems are rarely stable end-to-end. Context, retrieval, tools, updates—all of these can change. So even with temperature = 0, the system as a whole is still not deterministic like code.

The key point is that even then, the model is still thinking in probabilities—we’re just forcing it to take the most conservative path.

And temperature = 0 does not make the system safe from attackers; it only makes outputs more stable.

⸻

When LLM randomness doesn’t come from the model alone

In real systems, uncertainty comes from many layers, such as:

- changing context from conversation history

- retrieval that pulls different data each time

- tool outputs from external systems

- model updates

- policy changes

- concurrency and latency

So even if we use the same prompt and set temperature = 0, the end-to-end system is still not deterministic like code.

That’s because uncertainty comes from two main sources:

- Model-level randomness (temperature / top-p / decoding) → hallucinations, instruction-following failures, jailbreaks, goal hijacking

- System-level randomness (retrieval, tool outputs, history, model updates, concurrency) → retrieval poisoning, tool misuse, prompt injection via untrusted data, race conditions, partial failures

Lowering temperature only helps with model-level randomness. Real tail risk usually lives at system-level + privilege + adversary.

This is where LLMs start to resemble finance—not because they’re like markets, but because they are probability-driven systems.

⸻

A story that changed how an entire field thinks: LTCM

Let’s look at LLM risk through a finance lens.

Of course, LLM risk and financial risk are not the same. Finance has lived with randomness for a very long time—and has already collapsed because of it multiple times. That’s why it learned, painfully, that true risk does not live in the middle.

To understand why finance fears “the tail,” we need to talk about Long-Term Capital Management (LTCM).

LTCM was founded in the mid-1990s by a team with an almost mythical résumé: world-class financial mathematicians, former top bank executives, and two Nobel Prize–winning economists.

Their models were complex, elegant, and theoretically sound.

The core idea was simple: if two assets should be priced similarly but temporarily diverge, they will eventually converge again.

The strategy wasn’t flashy or speculative. It produced small but steady profits.

At first, LTCM performed extremely well—so well that many banks were willing to lend them large amounts of money, because everything looked safe.

⸻

Why did LTCM look so safe?

Three main reasons:

- Beautiful backtests using advanced mathematics and historical data—almost never failed.

- Stable historical performance: small but consistent profits, very few large losses, extremely low volatility.

- Risk looked “diversified”: many markets, many countries, many assets.

Overall: the system looked calm, risk looked low, and everything seemed reasonable.

That’s what made people lower their guard.

⸻

Then the world stopped following the assumptions

In 1998, Russia defaulted on its debt.

This event wasn’t in the historical data, wasn’t in stress scenarios, and caused fear across global markets.

Investors rushed away from risk. Assets that were assumed to be unrelated fell together. Correlations the model assumed were stable completely broke.

With high leverage, prices didn’t revert fast enough—and LTCM was forced to unwind positions in markets with disappearing liquidity.

Events the model believed were “almost impossible” happened—and happened simultaneously across markets.

In a short time, LTCM lost around $4.6 billion, threatening systemic collapse and forcing the U.S. Federal Reserve to intervene.

In the LLM world, leverage is analogous to privilege and automation: once something goes wrong, damage scales rapidly.

⸻

Was LTCM’s model wrong?

The answer is: the model wasn’t wrong within its assumptions. The assumptions failed when the world changed regimes.

The model worked exactly as designed, using historical data and measuring risk within known distributions.

The problem wasn’t lack of intelligence—it was inability to see the future.

⸻

The lesson finance never forgot: tail inevitability

From LTCM, finance learned a brutal lesson: events that look “almost impossible” will happen if you wait long enough.

This is the mathematics of time and scale. A one-in-a-million event becomes inevitable if you sample millions of times.

LLMs are stochastic systems too, but their randomness comes from both models and systems—not purely from nature. And the LLM world has adversaries.

More importantly, the failure distribution of LLMs is not stationary. Adversaries learn what causes failures and push the system toward the tail more often.

Many LLM failures are rare and not visible in everyday use—but extremely severe when they happen.

This is the same pattern finance has already lived through.

And in the LLM world, attackers don’t wait for a one-in-a-million event to occur naturally. They actively search for it—and they do so systematically.

Every failed attack gives attackers information: this method didn’t work this time (but might work next time), and sometimes clues about why it didn’t work.

Attackers don’t wait for models to fail. They set traps to make them fail.

⸻

Summary of this post

Post #1 is an introduction to frame the idea that both finance and LLMs are stochastic systems—but risk does not live in the “average.” Risk lives in the tail.

The question is not “Will it happen?” but “When?”

In the LLM world, tails don’t usually appear on their own. Adversaries push them to appear sooner and harder—especially when systems have privilege and automation.

So the real question isn’t “Can we make LLMs never fail?” but “When the bad day comes, will we still be standing?”

Finance has feared this question before—and built tools to force itself to face it honestly.

In the next post, I’ll look at how this fear led finance to invent tools like VaR and Expected Shortfall.

English translation by GPT-5.

This post continues in Part 2 .